Donating 50k. Lightcone's main projects – Lighthaven, Lesswrong, and websites for good causes – all seem very valuable for making AI go well. I would like there to be more AI safety events, better public discussion of AIS ideas, and more big comms efforts. I trust the Lightcone team a lot: they have good taste, are thoughtful and caring, and have interestingly different views on how to make AI go well from many other orgs I spend a lot of time around. I'd like to give them more financial security, so they can run their core products and have the freedom to try new things.


Lightcone Infrastructure

TL;DR: Lightcone Infrastructure, the organization behind LessWrong, Lighthaven, the AI 2027 website[1], the AI Alignment Forum, and many other things, needs about $2M to make it through the next year. Donate directly, send me an email, DM, Signal message (+1 510 944 3235), or leave a public comment on this post if you want to support what we do. We are a registered 501(c)(3) and are IMO the best bet you have for converting money into good futures for humanity.

We build beautiful infrastructure for truth-seeking and world-saving.

The infrastructure we've built over the last 8 years coordinates and facilitates much of the (unfortunately still sparse) global effort that goes into trying to make humanity's long-term future go well. Concretely, we:

build and run LessWrong.com and the AI Alignment Forum

build and run Lighthaven, a ~30,000 sq. ft. campus in downtown Berkeley where we host conferences, researchers, and various programs dedicated to making humanity's future go better

designed and built the websites for AI 2027, If Anyone Builds It, Everyone Dies, AI Lab Watch, and other public communication projects

act as leaders of the rationality, AI safety, and existential risk communities. We run conferences (less.online) and residencies (inkhaven.blog), participate in discussions on various community issues, notice and try to fix bad incentives, build grantmaking infrastructure, help people who want to get involved, and lots of other things.

In general, we try to take responsibility for the end-to-end effectiveness of these communities. If there is some kind of coordination failure, or part of the engine of impact that is missing, I aim for Lightcone to be an organization that jumps in and fixes that, whatever it is.

As far as I can tell, the vast majority of people who have thought seriously about how to reduce existential risk (and have evaluated Lightcone as a donation target) think we are highly cost-effective, including all institutional funders who have previously granted to us[2]. Many of those historical institutional funders are no longer funding us, or are funding us much less, not because they think we are no longer cost-effective, but because of political or institutional constraints on their giving. As such, we are mostly funded by donations from private individuals[3].

Additionally, many individuals benefit from our work, and I think there is a strong argument for beneficiaries of our work to donate back to us.

I am exceptionally proud of the work that we've done over the last decade. Knowing whether you are shaping the long-run future of humanity in positive directions is exceptionally hard. This makes it difficult to speak with great confidence about our impact here. But it is clear to me we have played a substantial role in how the world is grappling with the development of smarter-than-human artificial intelligence, probably for the better, and I do not think there is any other organization I would rather have worked at.

This, combined with our unique funding situation in which most large funders are unable to fund us, creates a very strong case for donations to us being the most cost-effective donation opportunity you can find for helping humanity achieve a flourishing future.

This post will try to share some evidence around this question, focusing on our activities in 2025. This is a very long post, almost 15,000 words. Please feel free to skim or skip anything that doesn't interest you, and feel free to ask any questions in the comments even if you haven't read the full post.

(For a longer historical retrospective, see last year's fundraiser post.)

A quick high-level overview of our finances

We track our finances in two separate entities:

Lighthaven, which is a high-revenue and high-cost project that roughly breaks even based on clients who rent out our space paying us (listed below as "Lighthaven LLC")

LessWrong and our other projects, which mostly don't make revenue and largely rely on financial donations to continue existing (listed below as "Lightcone Nonprofit")

In 2025, Lighthaven had about $3.6M in expenses (including our annual $1M interest payment on our mortgage for the property) and made about $3.2M in program revenue and so "used up" around $400k of donations to make up the difference.[4] Our other Lightcone projects (including LessWrong) used up an additional ~$1.7M. However, we also had to make 2024's $1M interest payment on Lighthaven's mortgage this year, which we had deferred from the previous year, and which was also covered from nonprofit donations.[5]

All in all, this means we spent on-net $3.1M in 2025, which matched up almost perfectly with the donation revenue we received over the last year (and almost exactly with our projected spending for 2025). Thank you so much to everyone who made last year possible with those donations! Since we do not have a deferred interest payment to make this year, we expect to need ~$2M in 2026 to maintain the same operations, and are aiming to raise $3M.
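As a rough sanity check on the arithmetic above, here is a minimal Python sketch that recombines the rounded figures quoted in this section. The variable names are illustrative and not taken from any Lightcone accounting system, and the "2026 baseline" line simply assumes that next year looks like 2025 minus the one-off deferred interest payment.

```python
# All figures in millions of USD, rounded as quoted in the text above.
lighthaven_expenses = 3.6      # includes the 2025 mortgage interest payment
lighthaven_revenue = 3.2       # program revenue from clients renting the venue
other_projects_net = 1.7       # LessWrong and the other Lightcone projects
deferred_2024_interest = 1.0   # one-off catch-up payment made in 2025

# Donations used to cover the gap between Lighthaven's costs and revenue.
lighthaven_shortfall = lighthaven_expenses - lighthaven_revenue

# Total donations spent on-net in 2025.
net_spend_2025 = lighthaven_shortfall + other_projects_net + deferred_2024_interest

# Assumption: 2026 repeats 2025 without the one-off deferred payment.
baseline_2026 = net_spend_2025 - deferred_2024_interest

print(f"Lighthaven shortfall: ${lighthaven_shortfall:.1f}M")
print(f"Net 2025 spend:       ${net_spend_2025:.1f}M")
print(f"2026 baseline:        ${baseline_2026:.1f}M")
```

The three components add up to roughly $3.1M, and removing the deferred payment leaves roughly $2.1M, in line with the ~$2M figure for 2026.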

You can explore our 2025 finances in this interactive Sankey diagram:

https://lwartifacts.vercel.app/artifacts/2025-fundraiser-sankey

If we fundraise less than $1.4M (or at least fail to get reasonably high confidence commitments that we will get more money before we run out of funds), I expect we will shut down. We will start the process of selling Lighthaven. I will make sure that LessWrong.com content somehow ends up in a fine place, but I don't expect I would be up for running it on a much smaller budget in the long run.

To help with this, the Survival and Flourishing Fund is matching donations up to $2.6M at an additional 12.5%![6] This means if we raise $2.6M, we unlock an additional $325k of SFF funding.
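For readers who want to see how the match behaves at other fundraising totals, here is a small Python sketch. It assumes the match scales linearly with the amount raised and stops growing at the $2.6M cap; that linear shape is an assumption for illustration, and `sff_match` is a hypothetical helper, not part of any SFF tooling.

```python
MATCH_CAP = 2_600_000   # donations beyond this amount are not matched
MATCH_RATE = 0.125      # additional 12.5% on matched donations


def sff_match(raised: float) -> float:
    """Estimated SFF match for a given amount raised (assumed linear up to the cap)."""
    matched_base = min(max(raised, 0.0), MATCH_CAP)
    return matched_base * MATCH_RATE


for raised in (1_400_000, 2_000_000, 2_600_000, 3_000_000):
    print(f"raised ${raised:>9,} -> match ${sff_match(raised):>9,.0f}")
```

At the cap this gives $325k, and under the stated assumption raising more than $2.6M does not increase the match further.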

Later in this post, after I've given an overview of our projects, I'll revisit our finances and give a bit more detail.

A lot of our work this year should be viewed through the lens of trying to improve humanity's ability to orient to transformative AI.

The best way I currently know of to improve the world is to help more of humanity realize that AI will be a big deal, probably reasonably soon, and to inform important decision-makers about the likely consequences of various plans they might consider.

And I do think we have succeeded at this goal to an enormous and maybe unprecedented degree. As humanity is starting to grapple with the implications of building extremely powerful AI systems, LessWrong, AI 2027, and Lighthaven are among the central loci of that grappling. Of course not everyone who starts thinking about AI comes through LessWrong, visits Lighthaven, or has read AI 2027, but they do seem among the most identifiable sources of a lot of the models that people use[7].

So what follows is an overview of the big projects we worked on this year, with a focus on how they helped humanity orient to what is going on with AI.

AI 2027

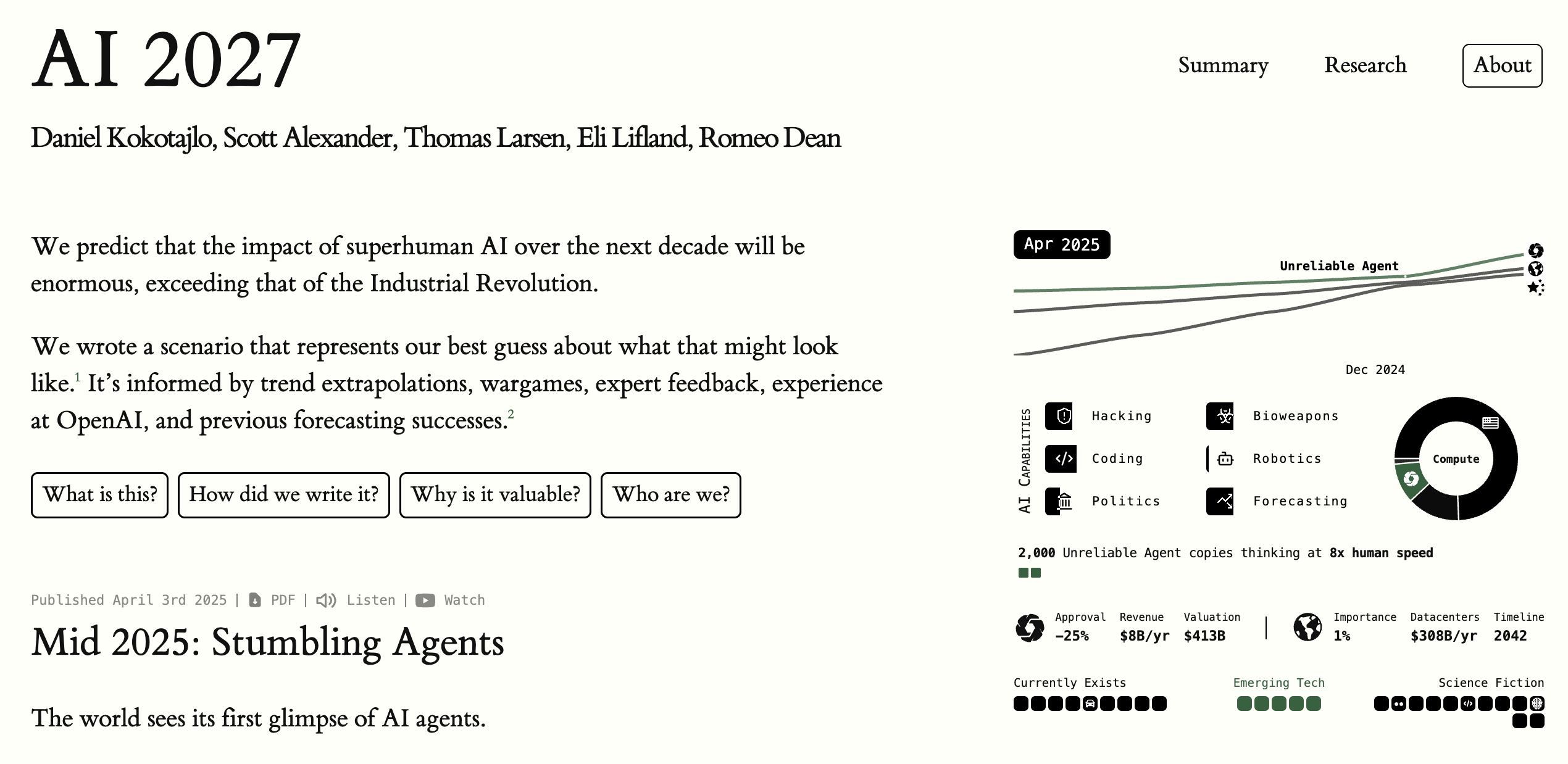

As far as I can tell, AI 2027 is the biggest non-commercially-driven thing that happened in 2025 in terms of causing humanity to grapple with the implications of powerful AI systems.

AI-2027.com homepage

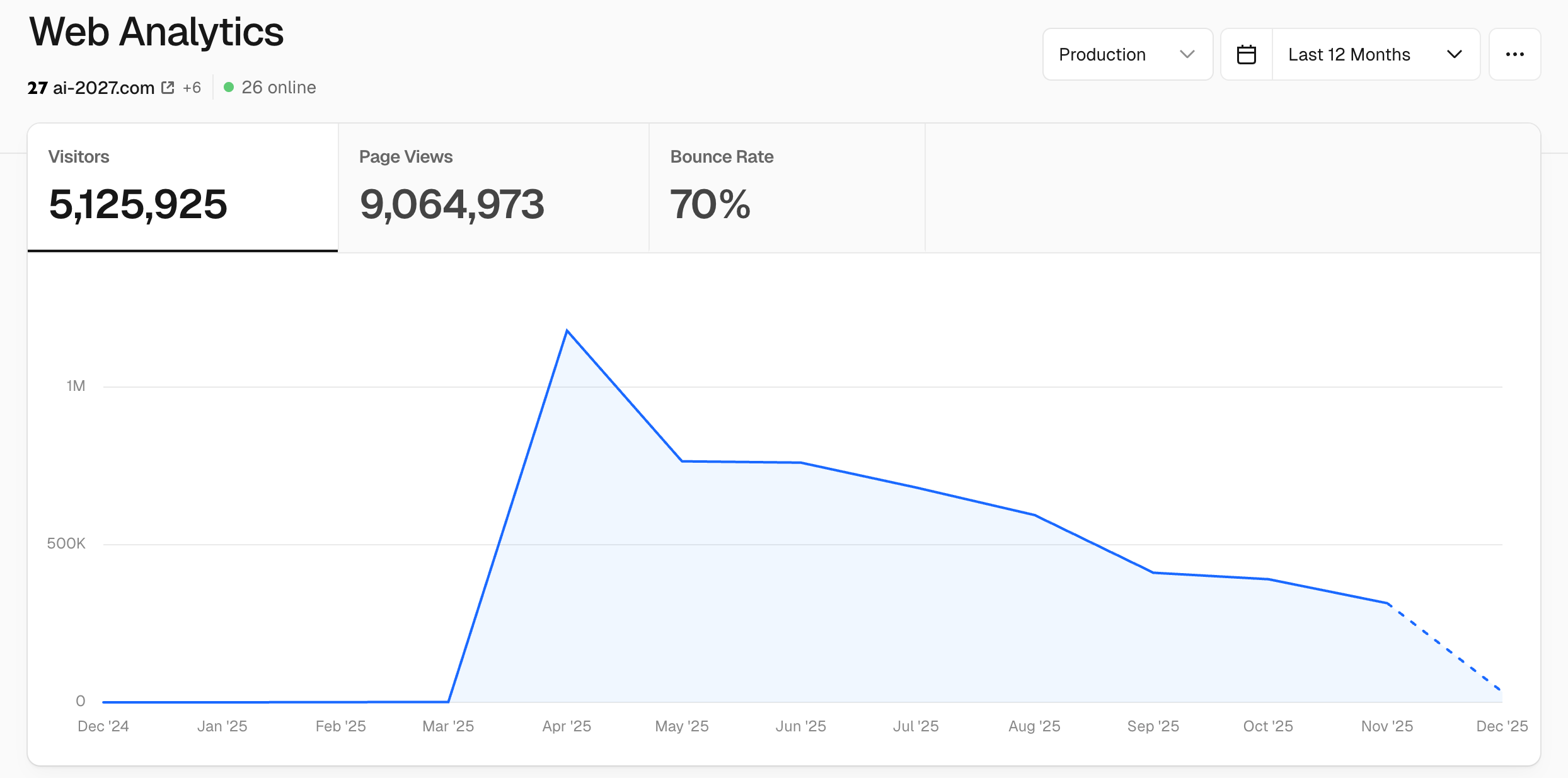

Published in April 2025, AI 2027 has been viewed more than 9 million times, and my best estimate is that secondary articles, videos and social media posts about the scenario have been read or watched by around 100 million people.

The two most popular YouTube videos covering AI 2027 have received ~9M and ~4M views, and the website has been featured in many hundreds of news stories, in over a dozen languages (see this spreadsheet for a sampling). The US vice president read it, and I know that many US federal government officials, advisors and analysts have read and engaged with it.

Now, have the consequences of all of that attention been good? My guess is yes, but it's certainly not obvious!

AI 2027 has been criticized for the aggressiveness of its timelines, which I agree are too short. I also have lots of other disagreements with the research and its presentation[8].

But despite these concerns, I still think that there is very little writing out there that helps you understand better what the future will look like. Concrete and specific forecasts and stories are extremely valuable, and reality is likely to end up looking much closer to AI 2027 than any other story of the future I've seen.

So I am glad that AI 2027 exists. I think it probably helped the world a lot. If there are worlds where governments and the public start taking the threat of powerful AI seriously, then those worlds are non-trivially more likely because of AI 2027.

But how responsible is Lightcone for the impact of AI 2027?

We worked a lot on the website and presentation of AI 2027, but most of the credit must go to the AI Futures team, who wrote it and provided all the data and forecasts.

Still, I would say Lightcone deserves around 30% of the credit, and the AI Futures team and Scott Alexander the other 70%[9]. The reach and impact of AI 2027 were hugely affected by the design and brand associated with it. I think we did an amazing job at those. I also think our role would have been very hard to replace. I see no other team that could have done a comparably good job (which is evidenced by the fact that, since AI 2027 was published, we have had many other teams reach out to us asking us to make similar websites for them).

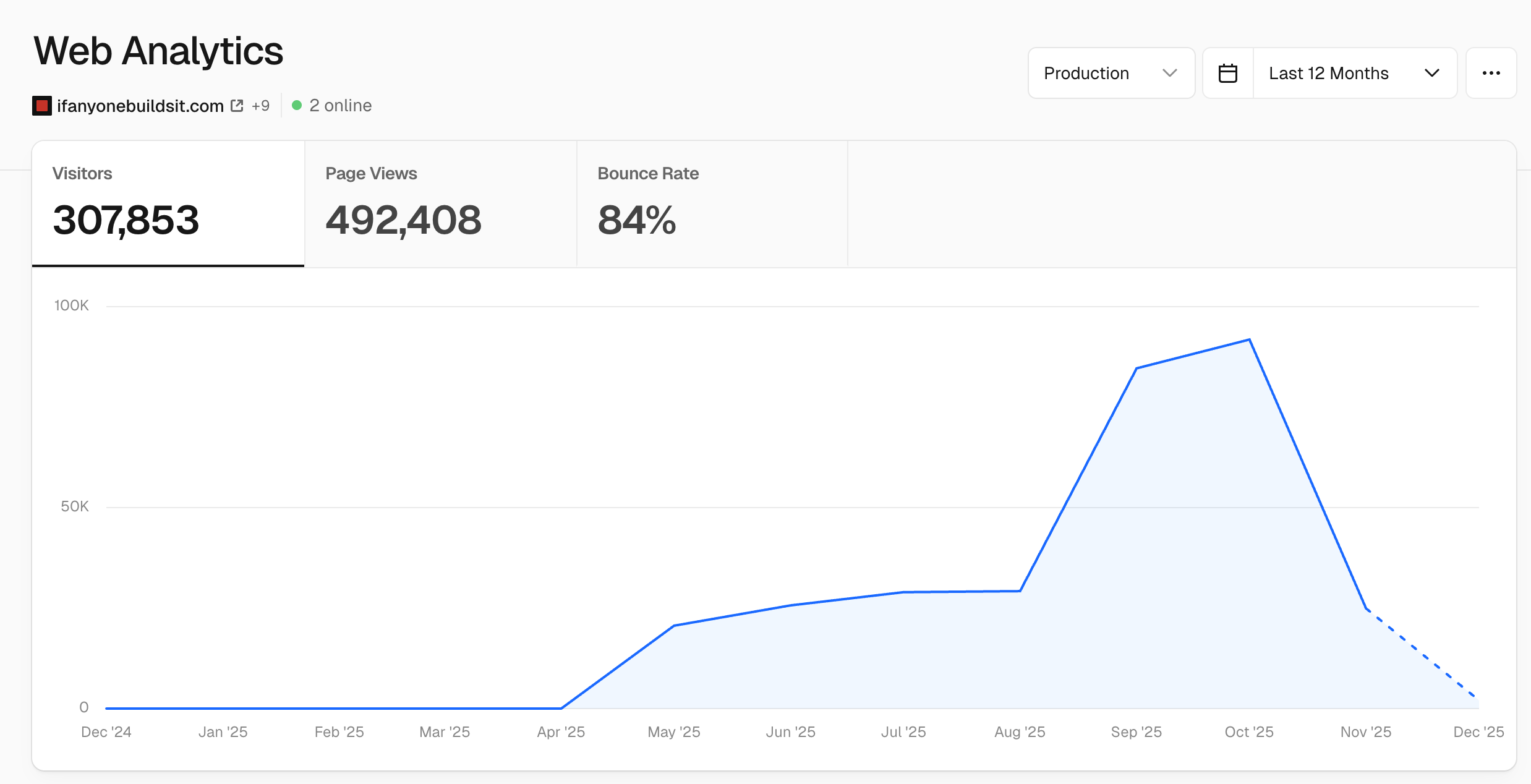

If Anyone Builds It, Everyone Dies

Partly as a result of our success with AI 2027, we collaborated with MIRI on the launch of their book, If Anyone Builds It, Everyone Dies. This project didn't quite rise to the level of prominence of AI 2027, but it's also a higher-fidelity message that emphasizes many more of the points I care the most about.

As part of that launch, we worked on marketing materials and the website, as well as various (ultimately unsuccessful) attempts to convince the publisher to change the horrible cover.

Views of ifanyonebuildsit.com over time

My guess is our work here ultimately made less of a difference than our work on AI 2027, though I still think we helped quite a bit. The resources at ifanyonebuildsit.com provide many of the best answers to common questions about AI existential risk. Our branding work on the website and marketing materials contributed to the book achieving NYT bestseller status (if you forced me to put a number on it, I would say ~7% of the credit for the impact of the book goes to us, and the rest to MIRI)[10].

Deciding To Win

Homepage of decidingtowin.org

On October 27th, Simon Bazelon, Lauren Harper Pope, and Liam Kerr released Deciding to Win, a data-driven report/think-piece about charting a path for reforming the Democratic Party. It received millions of views on social media, and was covered by essentially every major publication in the US, including Vox, Politico, the New York Times, the Washington Post, the Associated Press, the New York Post, Semafor, The Atlantic, The Dispatch, Puck News, The Nation, Current Affairs, and PBS NewsHour. I have some hope it will end up as one of the most important and best things in US politics this year.

This is a weird project for Lightcone to be working on, so I want to give a bit of context on why we did. I don't generally think Lightcone should work much on this kind of political project[11]. Most reports in US partisan politics are close to zero-sum propaganda with the primary effect of increasing polarization.

But man, despite this domain being largely a pit of snakes, it is also clear to me that the future of humanity will, to an uncomfortable degree, depend on the quality of governance of the United States of America. And in that domain, really a lot of scary things are happening.

I don't think this fundraiser post is the right context to go into my models of that, but I do think that political reform of the kind that Deciding To Win advocates and analyzes (urging candidates to pay more attention to voter preferences, moderating on a large number of issues, and reducing polarization) is at the rare intersection of political viability and actually making things substantially better.

Lightcone built the website for this project on an extremely tight deadline within a 1-week period, including pulling like 3 all-nighters. The last-minuteness is one of the things that makes me think it's likely we provided a lot of value to this project. I can't think of anyone else around who would have been available to do something so suddenly, and who would have had the design skills and technical knowledge to make it go well.

The presentation of a report like this matters a lot, and the likely next best alternative for Deciding to Win would have been to link to an embedded PDF reader, destroying the readability on mobile and also otherwise ending up with much worse presentation. My guess is that Lightcone increased the impact of this whole project by something like 60%-70% (and naively we would deserve something like 35% of the credit).

I expect a world in which its recommendations were widely implemented would be a world with really quite non-trivially better chances of humanity achieving a flourishing future[12].

Note: The Deciding to Win team would like to add the following disclaimer:

Please note that our decision to contract with Lightcone Infrastructure for this project was motivated solely by our view that they were best-positioned to quickly build an excellent website. It does not imply our support or endorsement for any of Lightcone Infrastructure's other activities or philosophical views.

LessWrong

And of course, above all stands LessWrong. LessWrong is far from perfect, and much on this website fills me with recurring despair and frustration, but ultimately, it continues to be the best-designed truth-seeking discussion platform on the internet and one of the biggest influences for how the world relates to AI.

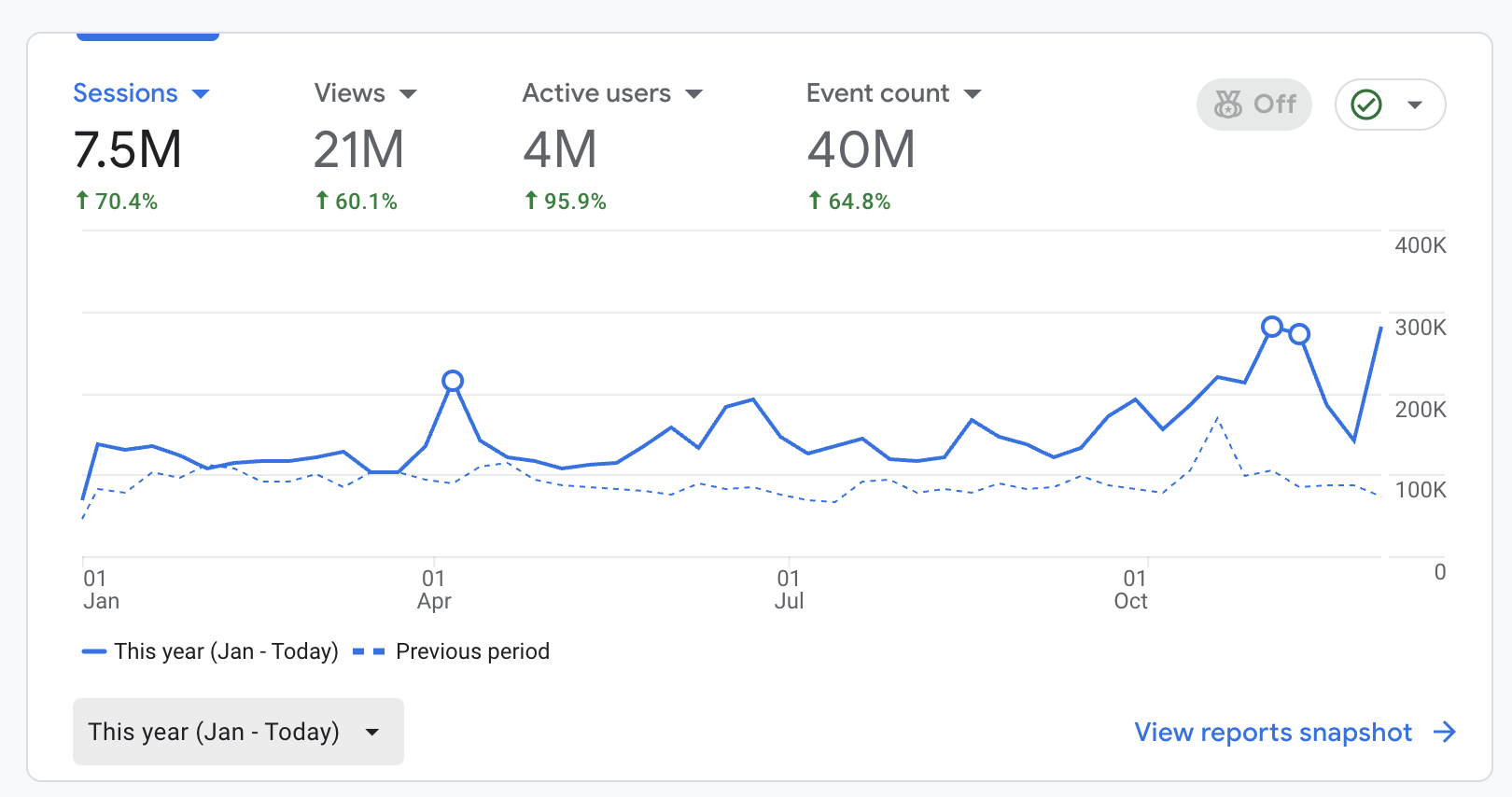

At least in terms of traffic and engagement statistics, 2025 has been our biggest year yet:

LessWrong Google Analytics statistics for 2025 vs. 2024

According to Google Analytics we've had almost twice as many users visit this year as previous years, and around 65% more activity in terms of sessions and views[13]. Other activity metrics are up as well:

https://lwartifacts.vercel.app/artifacts/metrics-2025

https://lwartifacts.vercel.app/artifacts/wordcounts-2025

But of course, none of this matters unless this exposure ultimately reaches people with influence over how the future goes and actually improves their decision-making.

In last year's fundraiser, I provided some evidence to this effect: Last year's analysis

I largely stand by this analysis. On the question of LessWrong influencing important ideas, we got a bunch of discussion of AI timelines as a result of AI 2027, lots of discussion on emergent misalignment as a result of various high profile research releases from frontier labs, and my sense is that much of the discussion of frontier AI regulation like SB1047, SB53 and the EU AI Act ended up being substantially influenced by perspectives and models developed on LessWrong.

On the question of LessWrong making its readers/writers more sane, we have some evidence of AI continuing to be validated as a huge deal, at least in terms of market returns and global investment. Overall the historical LessWrong canon continues to be extraordinarily prescient about what is important and how history will play out. It has continued to pay a lot of rent this year.[16]

The question of LessWrong causing intellectual progress feels like something we have not gotten a huge amount of qualitatively new, interesting evidence on. I do think a lot of good work has been produced on LessWrong in the past year, but my guess is that if someone wasn't convinced by the previous decade of ideas, this year is not going to convince them. Nevertheless, if you want a sampling yourself, I do recommend checking out the forecasted winners of the 2025 review:

How did we improve LessWrong this year?

The three biggest LessWrong coding projects (next to a long list of UI improvements, bugfixes, and smaller features you can check out on our GitHub) were...

a big backend refactor,

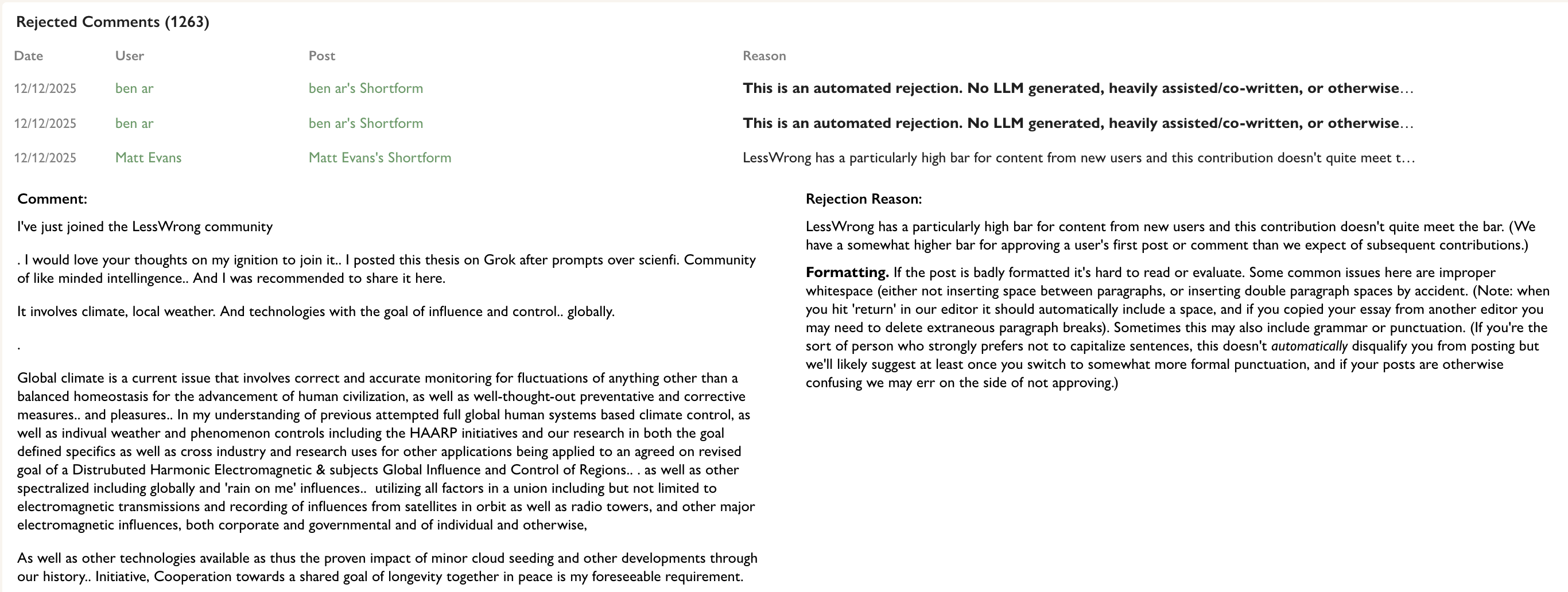

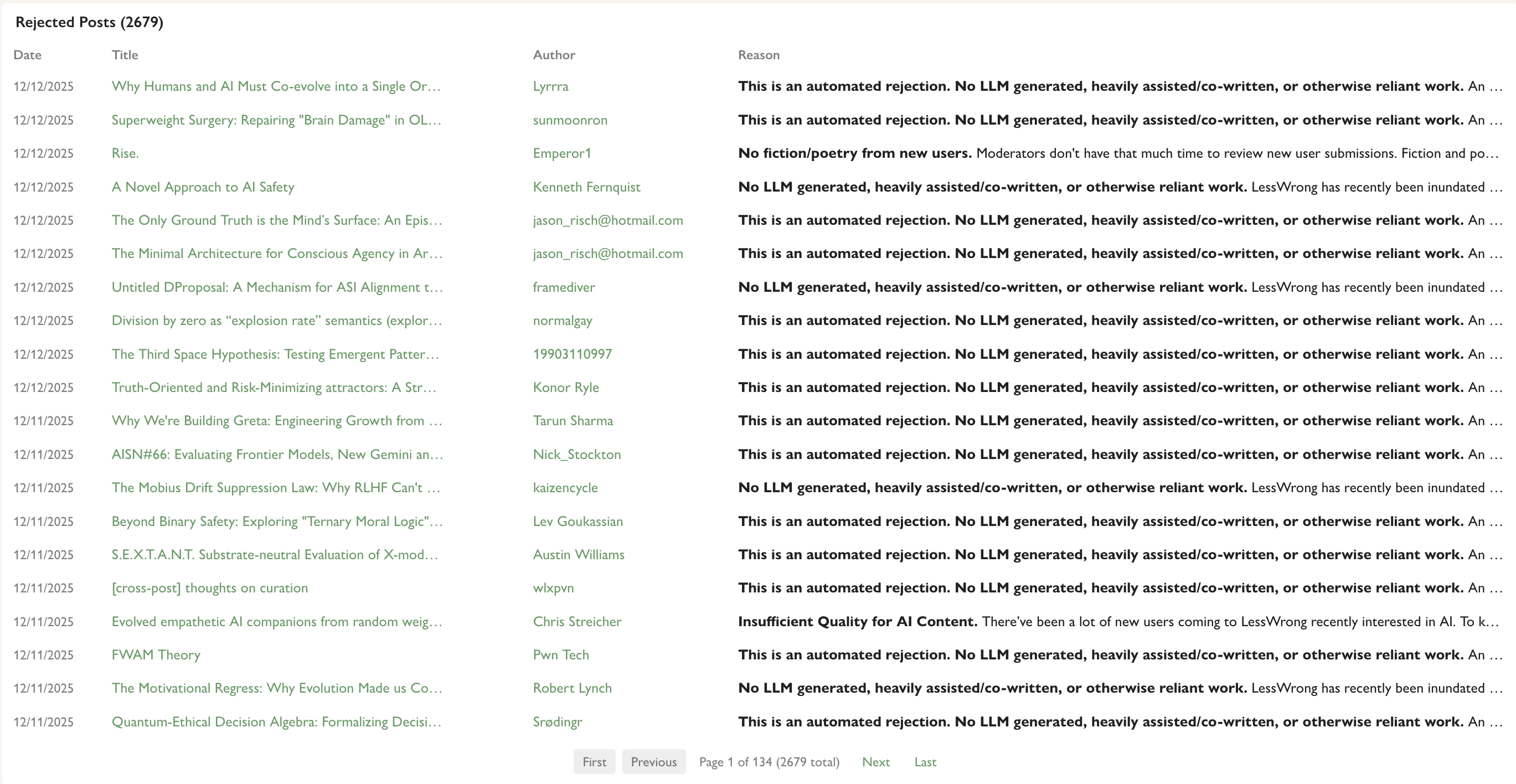

an overhaul of our moderation tools to deal with the ever-increasing deluge of submissions of AI-generated content on LessWrong,

the LessWrong frontpage feed.

And then the central theme of our non-coding work has been moderation. I spent a lot of time this year thinking and writing about moderation (including some high-profile ban announcements), and as the amount of new content being posted to the site has more than tripled, much of it violating our AI content policy, the workload to enforce those policies has gone up drastically.

Backend refactor

The LessWrong codebase has for a while been buckling under its own weight. This wasn't a huge problem, but we noticed that some of the technical debt posed particularly big challenges for coding assistants trying to navigate the codebase. Also, it meant certain targets I wanted to hit for performance were very hard to achieve.

So we fixed it! We despaghettified much of our codebase and moved LessWrong to the same framework as all of our other coding projects (Next.js). Performance is now faster than it's ever been, and coding agents seem to have much less trouble navigating our codebase, even accounting for how much smarter they've become over the course of the year. That said, this choice was controversial among my staff, so history will have to tell whether it was worth it. It did cost us like 8 staff months, which was pretty expensive as things go.

Overhauling our moderation tools

If you want to get a snapshot of the experience of being an internet moderator in 2025, I encourage you to go to lesswrong.com/moderation and check out the kind of content we get to sift through every day:

While I am glad that the language models seem to direct hundreds to thousands of people our way every day as one of the best places to talk about AI, they unfortunately are also assisting in sending huge mountains of slop that we have to sift through to make sure we don't miss out on the real humans who might end up great contributors to things we care about.

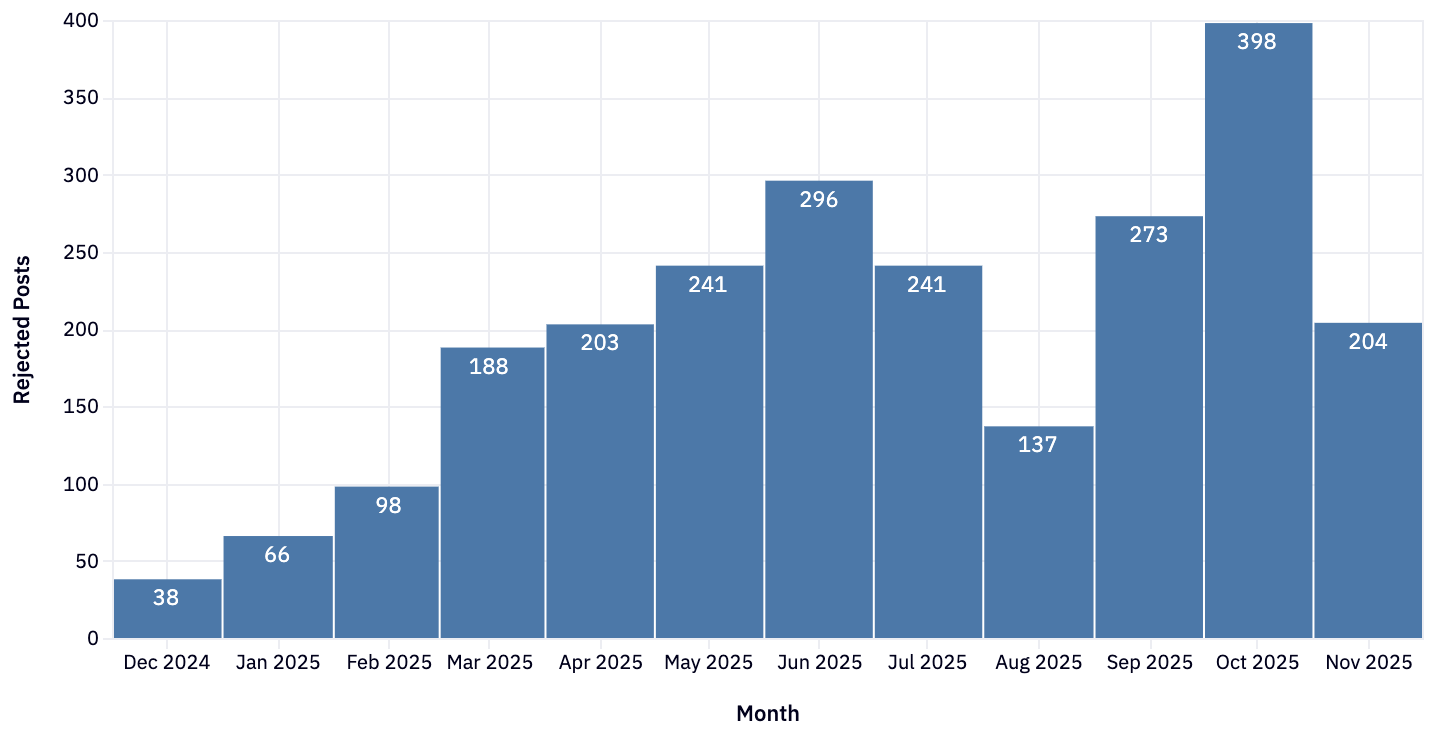

Rejected posts per month. That jump in March is the release of the super sycophantic GPT-4o. Thank you Sama!

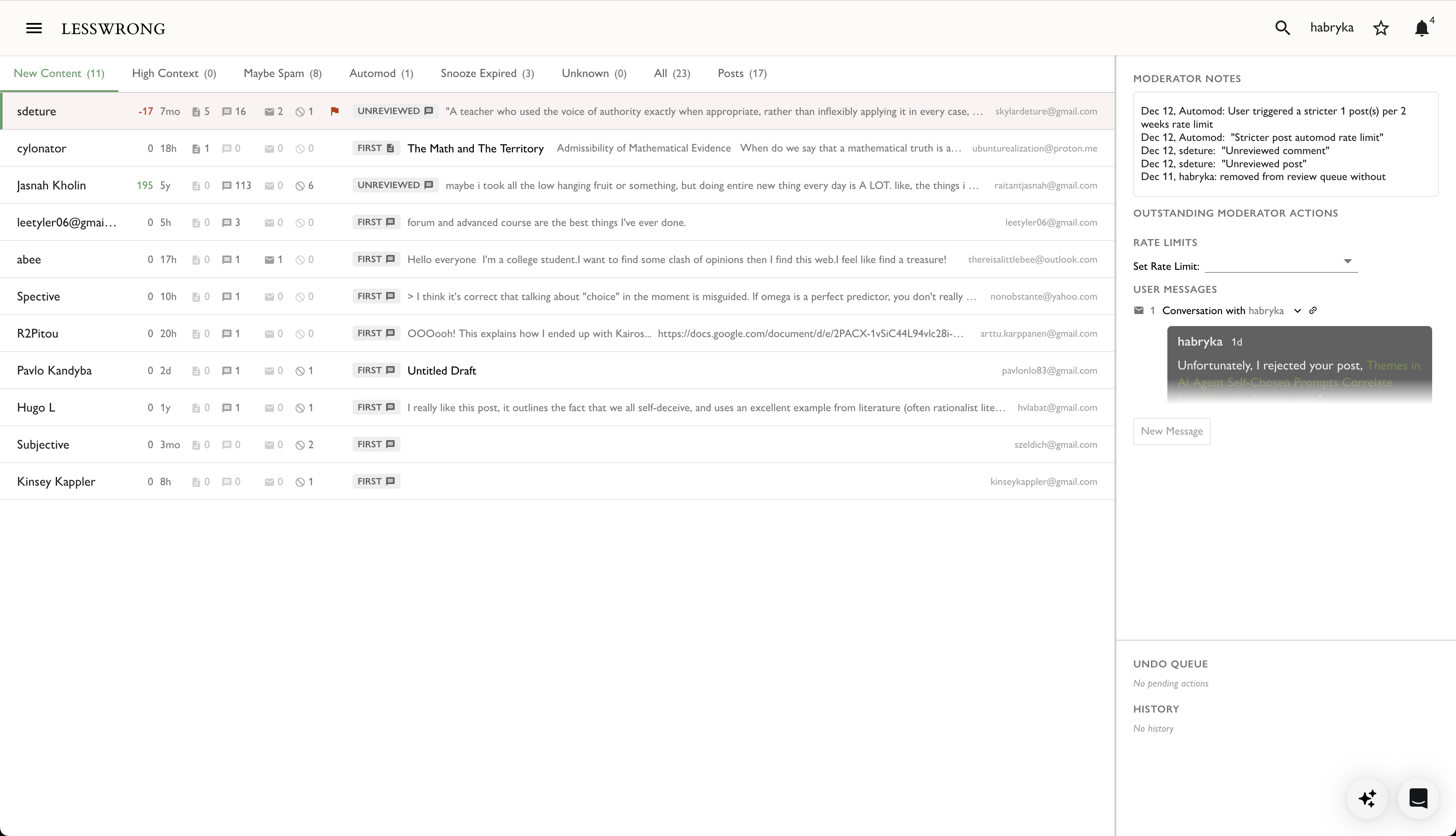

To make processing the volume of submissions feasible, we revamped our moderation UI, and iterated on automated AI-detection systems to reduce our workload:

The LessWrong admin moderation UI

The UI is modeled after Superhuman mail and has cut down our moderation processing time by something like 40%.

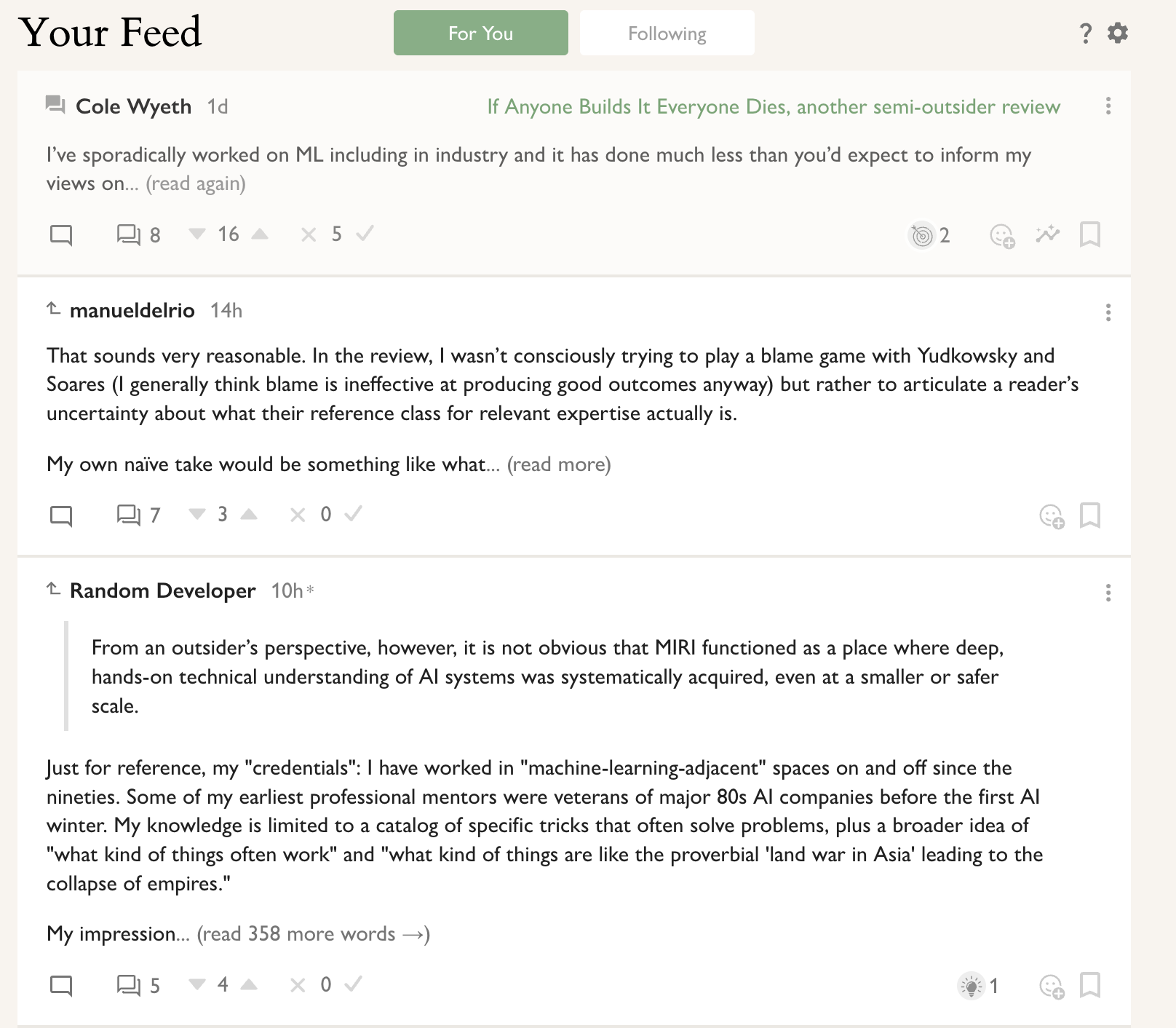

The LessWrong frontpage feed

The new feed section on the frontpage

As the volume of content on LessWrong increases, it stops being in the interest of approximately anyone to simply see an ordered list of all recent comments on the frontpage of the site. I spend ~2 hours a day reading LessWrong and even I felt like the recent discussion section was failing to show me what I wanted to see!

And so we've been working on a feed for the frontpage of LessWrong that somehow tries to figure out what you want to read, selecting things to show you from both the archives of LessWrong and the torrent of content that gets posted here every day.

I am not totally satisfied with where the feed is currently at. I think the current algorithm is kind of janky and ends up showing me too many old posts, and too little of the recent discussion on the site. It also turns out that it’s really not clear how to display deeply nested comment trees in a feed, especially if you already read various parts of the tree, and I still want to iterate more on how we solve those problems.

But overall, it’s clear to me we needed to make this jump at some point, and what we have now, despite its imperfections, is a lot better than what we had previously.

Many UI improvements and bugfixes

And then we also just improved many small things all over LessWrong and fixed many, many bugs. I really can't list them all here, but feel free to dig through the GitHub repository if you are curious.

Lighthaven

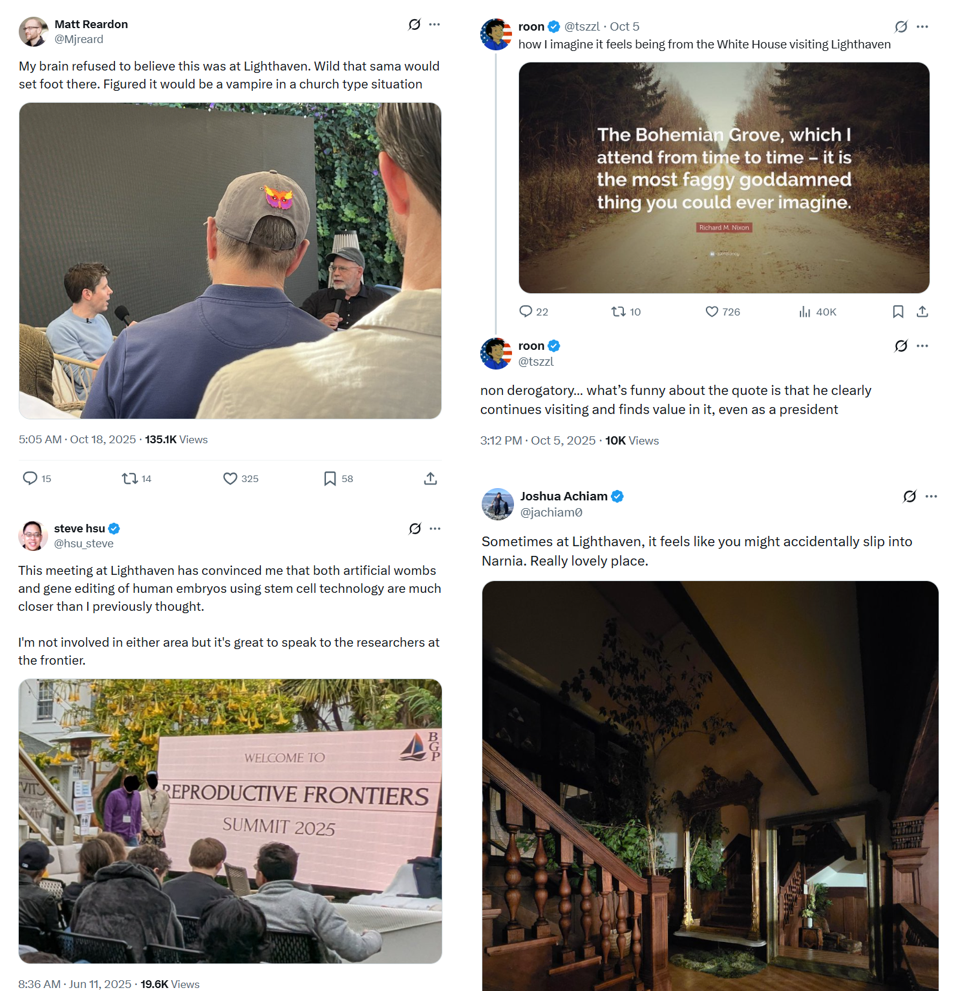

Now, the other big strand of our efforts is Lighthaven, our 30,000 sq. ft. campus in downtown Berkeley.

It's really hard to estimate the positive externalities from a space like Lighthaven. However, I do really think it is helping a lot of the people who will have a big effect on how humanity navigates this coming decade have better conversations, have saner beliefs, and have better allies than they would have otherwise had. When I think of the 1000 most important conversations that happened in the Bay Area in the last year, my guess is a solid 1% of those happened at Lighthaven during one of our events.

The people love Lighthaven. It is a place of wonder and beauty and discourse—and I think there is a decent chance that no other physical place in the world will have ended up contributing more to humanity's grappling with this weird and crazy future we are now facing.

And look, I want to be honest. Lighthaven was a truly crazy and weird and ambitious project to embark on. In as much as you are trying to evaluate whether Lightcone will do valuable and interesting things in the future, I think Lighthaven should be a huge positive update.

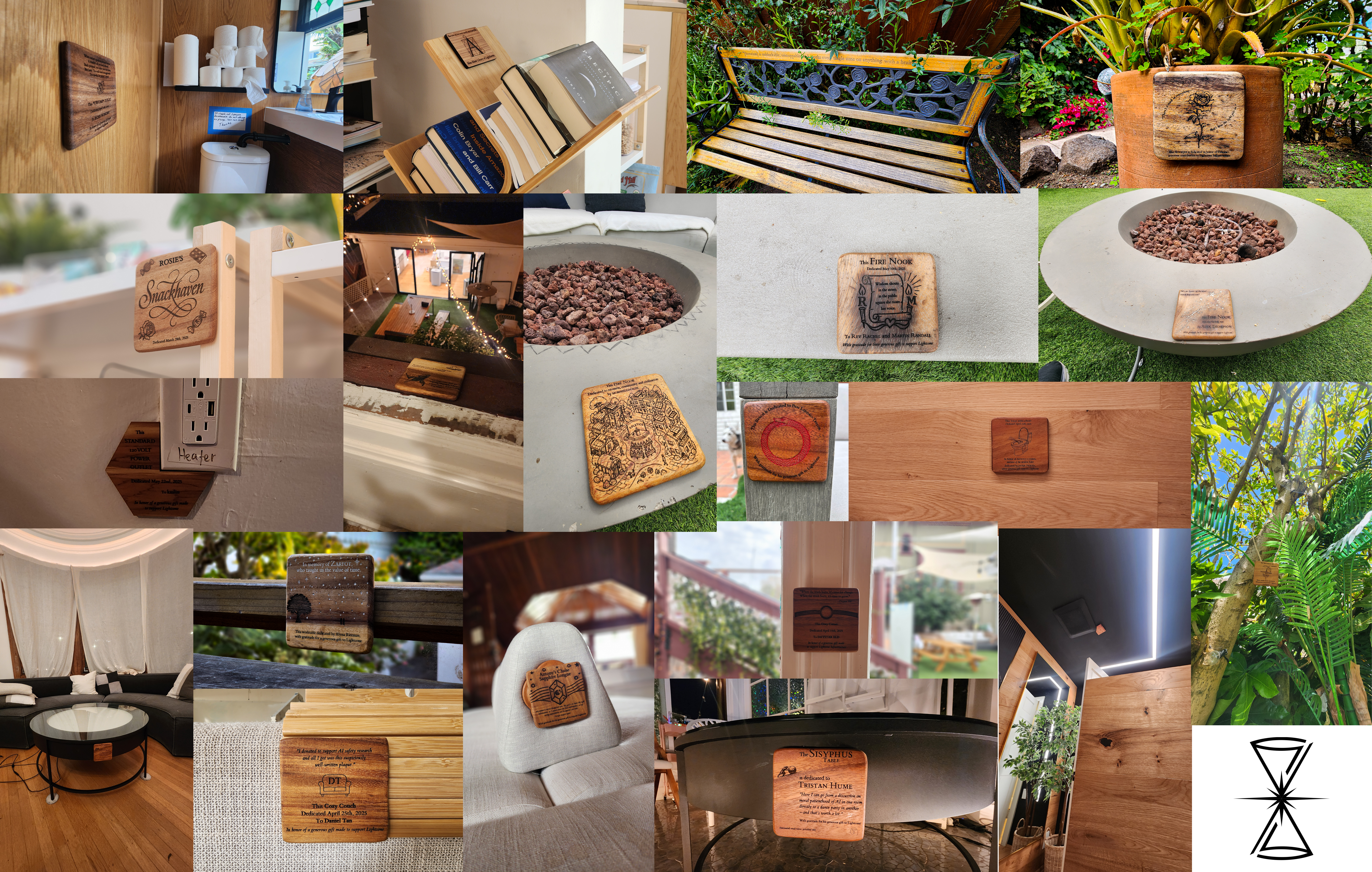

2025 has been the venue's busiest year to date. Here is a collage of many of the events we have hosted this year:

Here is a calendar view of our publicly viewable events (we also had a small number of private events):

https://lwartifacts.vercel.app/artifacts/lighthaven-calendar

Almost all of these Lighthaven events were put on by outside organizations, with Lightcone providing the venue and some ops support. However, Lightcone directly organized two events in 2025: LessOnline and Inkhaven.

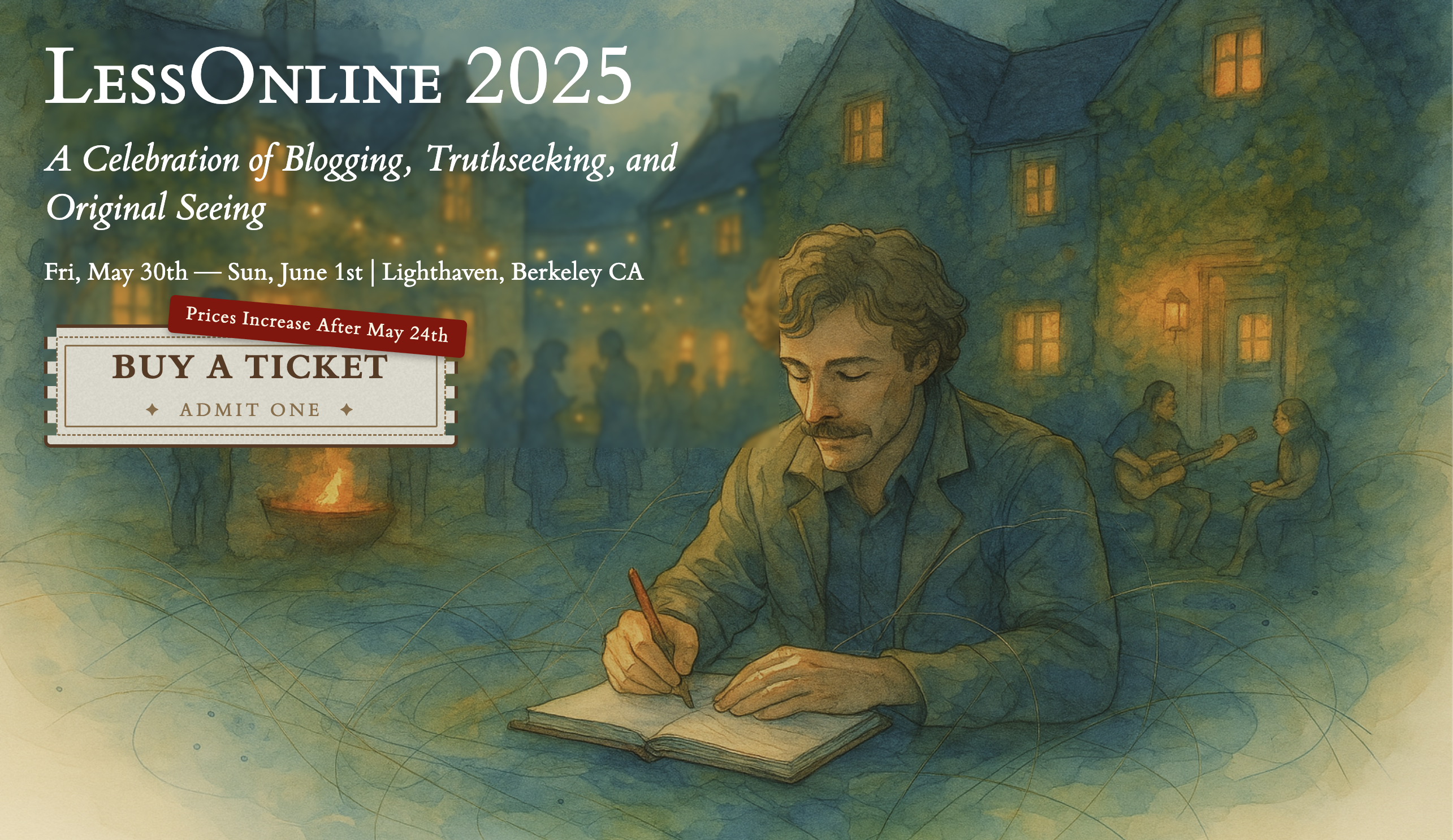

LessOnline

LessOnline: A Festival of Writers Who are Wrong on the Internet

We run an annual conference for the blogosphere named LessOnline. This year, over 600 people gathered together to celebrate being wrong on the internet. Many rationalist writers like Eliezer Yudkowsky, Scott Alexander, and Zvi Mowshowitz attend. Many rat-adjacent writers also love to come, including Patrick McKenzie, David Chapman, Michael Nielsen, Scott Sumner, and more. (...actually, the event caused Scott Aaronson to decide he's a rationalist now.)

People seemed to have a good time, leading activities/sessions and meeting the blogosphere in person. Among the 360 people who filled out the feedback form, the average rating was 8.5, the NPS was 37, and the average rating for Lighthaven as a venue was 9.1, a good sign about the venue's capacity, given that it was our biggest event to date!

The first year we ran it (2024), we made some basic mistakes and only just broke even on it. This year, we made a modest profit ($50k-$100k), and it also took about half as much input from the core Lightcone team to organize, so we're in a good position to keep running this annually.

Some photos from this year's LessOnline—photo credit to Drethelin and Rudy!

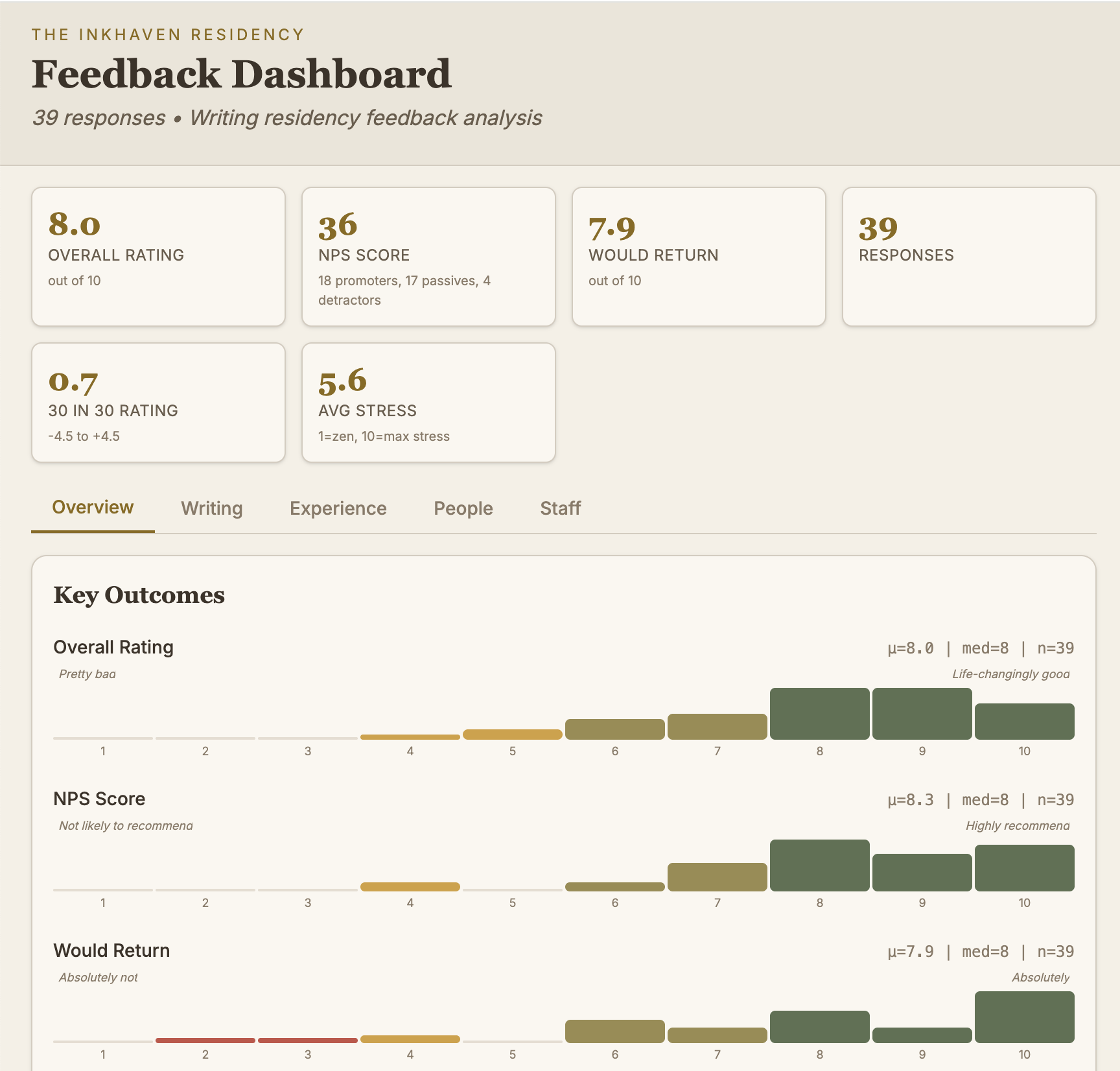

Inkhaven Residency

For the month of November, the internet came to Lighthaven. 41 great writers paid us to come gather at Lighthaven to write a blog post every day. The participants loved it, and even the Lightcone staff ended up publishing a bunch of IMO pretty great posts!

The program wasn't for everyone, but ratings are mostly high across the board, and the large majority would like to return when the opportunity presents itself. Another neat feature of a daily writing program is that a really huge number of participants wrote reflections on their experience, which really gives you a lot of surface area on how the program went:

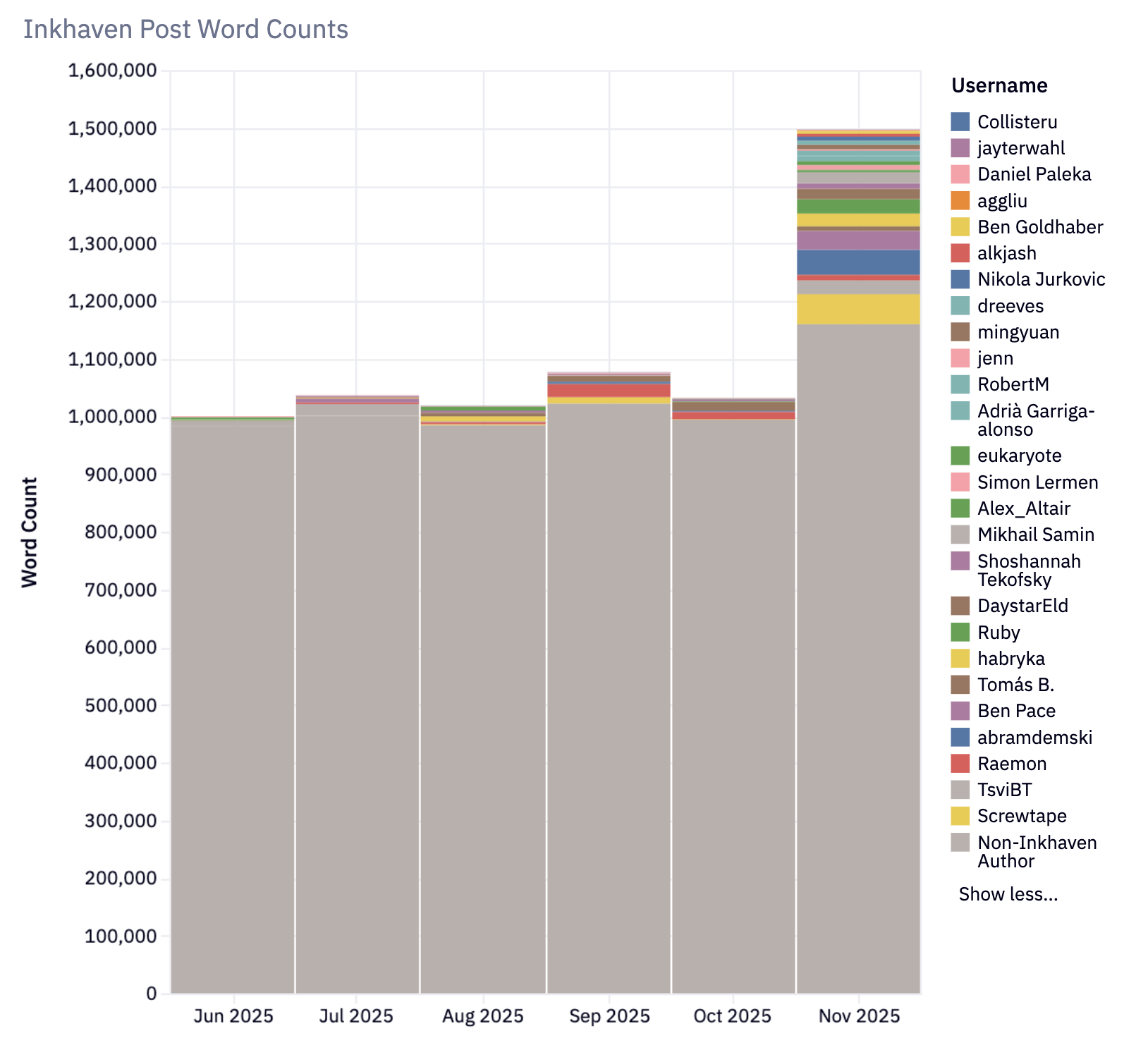

Inkhaven was the first program that really benefited from the fact that we run both lots of online publishing infrastructure, and a physical space like Lighthaven. The event ended up being both good for the world, and IMO also good for LessWrong.

Word counts of LessWrong posts, with Inkhaven participants marked in color.

Of course, by its nature of being a program where you are supposed to publish daily, not all of those posts were of the same quality as other LessWrong posts. However, my guess is their average quality was actually substantially higher than the average quality of non-Inkhaven posts. This is evidenced by their average karma, as well as a sampling of November's top 20 posts by karma[17].

Around 212 of the 748 posts in November 2025 were written by Inkhaven authors (almost 30%)! Those posts received an average of 50 karma, compared to 27.8 karma for non-Inkhaven posts. Also, of the top 20 posts by karma published in November, 11 are Inkhaven posts (55%, or about 2x their ~28% share of all posts that month).
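To make these comparisons easy to check, here is a short Python sketch that recomputes them from the counts quoted above. The variable names are illustrative. The overall average karma is derived under the assumption that the two quoted averages cover all 748 posts between them.

```python
total_posts = 748
inkhaven_posts = 212
inkhaven_avg_karma = 50.0
other_avg_karma = 27.8
top_n = 20
inkhaven_in_top = 11

other_posts = total_posts - inkhaven_posts
other_in_top = top_n - inkhaven_in_top

# Share of all November posts vs. share of the top 20.
share_of_posts = inkhaven_posts / total_posts
share_of_top = inkhaven_in_top / top_n
overrepresentation = share_of_top / share_of_posts

# Per-post chance of landing in the top 20, Inkhaven vs. non-Inkhaven.
per_post_ratio = (inkhaven_in_top / inkhaven_posts) / (other_in_top / other_posts)

# Post-count-weighted average karma across both groups (derived, not quoted).
overall_avg_karma = (
    inkhaven_posts * inkhaven_avg_karma + other_posts * other_avg_karma
) / total_posts

print(f"Inkhaven share of posts:  {share_of_posts:.1%}")
print(f"Inkhaven share of top 20: {share_of_top:.1%}")
print(f"Overrepresentation:       {overrepresentation:.2f}x")
print(f"Per-post top-20 ratio:    {per_post_ratio:.2f}x")
print(f"Overall average karma:    {overall_avg_karma:.1f}")
```

With the quoted figures, Inkhaven posts make up about 28% of posts but 55% of the top 20, which is roughly 1.9x their share. Measured per post against non-Inkhaven posts, the ratio is closer to 3.1x, so which multiplier applies depends on the baseline you compare against.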

I also liked Garrett Baker's thoughts on the effect of Inkhaven on LessWrong.

Inkhaven was very close to being economically self-sufficient[18]. Participants seemed generally happy with the price, and the program was a decently good fit for sponsorship, which allowed us to get quite close to breaking even with the labor and Lighthaven costs of the program.

Finances

And that brings us to our finances! If you are thinking about giving us money, you'd want to know where we are spending money and what we would do with additional funds. So let's get down to brass tacks.

https://lwartifacts.vercel.app/artifacts/2025-fundraiser-sankey

Some key highlights from 2025:

Lighthaven got pretty close to breaking even!

Most of our expenses are our core staff salaries

With additional funds from SFF and the EA Infrastructure Fund in the middle of this year, we were able to make our deferred 2024 interest payment and did not have to take out a loan to do that[19]

If anyone has any more detailed questions about anything, including our salary policies[20], please feel free to ask in the comments!

Lightcone and the funding ecosystem

An important (though often tricky to think about) question for deciding whether to donate to Lightcone is how that plays into other people funding us.

Much of what I said last year about the funding ecosystem is still true. There don't really exist large philanthropic foundations that can fund our work besides SFF, and SFF is funding us (though only partially). Open Philanthropy (now Coefficient Giving) appears to continue to have some kind of blacklist for recommending grants to us, FTX of course no longer exists, and there are currently no other large philanthropic institutions that fund the kind of work we are doing. Various grantmaking programs for AI safety research have been popping up (such as the UK's ARIA program, or grant programs by capability labs), but they can only fund research directly, not any kind of research infrastructure.

Eli Rose, who was in charge of evaluating us at Open Phil/Coefficient Giving when they were still able to grant to us, said this last year on our fundraiser:

I work as a grantmaker on the Global Catastrophic Risks Capacity-Building team at Open Philanthropy; a large part of our funding portfolio is aimed at increasing the human capital and knowledge base directed at AI safety. I previously worked on several of Open Phil’s grants to Lightcone.

As part of my team’s work, we spend a good deal of effort forming views about which interventions have or have not been important historically for the goals described in my first paragraph. I think LessWrong and the Alignment Forum have been strongly positive for these goals historically, and think they’ll likely continue to be at least into the medium term.

You can read the rest of his comment on the original post.

All of this continues to put Lightcone in a position that is uniquely dependent on non-institutional donors to fill our funding gap.

This situation honestly feels pretty crazy to me. Given how much of the broader ecosystem's funding flows through institutional funders, it seems kind of insane to have infrastructure as central as LessWrong and Lighthaven, and projects as transparently successful as AI 2027, get primarily funded by non-institutional donors, especially given that staff at places like Coefficient Giving and SFF and FLI think we are highly cost-effective. But as far as I know this is how it will be at least for 2026 (though we will certainly apply to whatever institutional funding programs are open to organizations like us, in as much as they exist)[21].

Sidebar on SFF Funding to Lightcone

Copying some context from last year's fundraiser:

This leaves the Survival and Flourishing Fund, who have continued to be a great funder to us. [...] But there are additional reasons why it's hard for us to rely on SFF funding:

Historically on the order of 50% of SFF recommenders are recused from recommending us money. SFF is quite strict about recusals, and we are friends with many of the people that tend to be recruited for this role. The way SFF is set up, this causes a substantial reduction in funding allocated to us (compared to the recommenders being fully drawn from the set of people who are not recused from recommending to us).

Jaan and SFC helped us fund the above-mentioned settlement with the FTX estate (providing $1.7M in funding). This was structured as a virtual "advance" against future potential donations, where Jaan expects to only donate 50% of future recommendations made to us via things like the SFF, until the other 50% add up to $1.29M in "garnished" funding. This means for the foreseeable future, our funding from the SFF is cut in half.

This is also why the SFF matching for this fundraiser is 12.5%. The matching rate we applied with was 25%, but due to the garnishing mentioned above, the actual rate comes out to 12.5%. On the plus side, if we fully meet our fundraising target this year, we will be very close to being able to receive 100% of recommendations from SFF in the future, which is a substantial additional incentive.
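The garnishing mechanism can be sketched in a few lines of Python. This is only an illustration under stated assumptions: `sff_payout` is a hypothetical helper, the amount already garnished is unknown here and passed in as a parameter, and the sketch assumes that once the $1.29M target is reached, any remainder of a recommendation is paid out in full.

```python
GARNISH_TARGET = 1_290_000   # total to be withheld, per the text above
GARNISH_SHARE = 0.5          # half of each recommendation is withheld


def sff_payout(recommended: float, garnished_so_far: float) -> tuple[float, float]:
    """Split one recommendation into (paid_out, withheld).

    Assumption: withholding stops as soon as the cumulative target is reached.
    """
    remaining = max(GARNISH_TARGET - garnished_so_far, 0.0)
    withheld = min(recommended * GARNISH_SHARE, remaining)
    return recommended - withheld, withheld


# A 25% match on $2.6M is a $650k recommendation; half of it is withheld.
paid, withheld = sff_payout(0.25 * 2_600_000, garnished_so_far=0.0)
print(f"paid out: ${paid:,.0f}, withheld: ${withheld:,.0f}")
print(f"effective match rate: {paid / 2_600_000:.1%}")
```

While the garnishing is in effect, a 25% nominal match pays out at 12.5%. Each withheld dollar also moves the running total closer to the point where recommendations would be received in full.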

Lightcone's work on improving the funding landscape

Historically one of our big strains of work has been our efforts on improving the philanthropic funding landscape around AI existential risk and adjacent areas. I summarized our work in the space like this in last year's fundraiser:

The third big branch of historical Lightcone efforts has been to build the S-Process, a funding allocation mechanism used by SFF, FLI and Lightspeed Grants.

Together with the SFF, we built an app and set of algorithms that allows for coordinating a large number of independent grant evaluators and funders much more efficiently than anything I've seen before, and it has successfully been used to distribute over $100M in donations over the last 5 years. Internally I feel confident that we substantially increased the cost-effectiveness of how that funding was allocated—my best guess is on the order of doubling it, but more confidently by at least 20-30%[18], which I think alone is a huge amount of good done.[19]

Earlier this year, we also ran our own funding round owned end-to-end under the banner of "Lightspeed Grants":

Somewhat ironically, the biggest bottleneck to us working on funding infrastructure has been funding for ourselves. Working on infrastructure that funds ourselves seems rife with potential concerns about corruption and bad incentives, and so I have not felt comfortable applying for funding from a program like Lightspeed Grants ourselves. Our non-SFF funders historically were also less enthusiastic about us working on funding infrastructure for the broader ecosystem than our other projects.

We did not do as much work this year on improving the funding landscape as we have done in previous years, largely owing to lack of funding for development of that kind of infrastructure!

I have however continued to be an active fund manager on the Long Term Future Fund, which has continued to make a substantial number of high-quality grants over the course of the year, and we have picked up one important strain of work that I am hoping to develop more in 2026.

The AI Risk Mitigation Fund (ARM Fund) was founded in 2024 by members of the Long Term Future Fund, as a fund more specifically dedicated to reducing catastrophic risk from AI. This year the ARM Fund moved from being a project fiscally sponsored by Emergent Ventures to becoming a program of Lightcone Infrastructure (but still under substantial leadership from Caleb Parikh).

Our exact plans with the ARM Fund are up in the air, but you can read more about them in our "Plans for 2026" section.

Lightcone as a fiscal sponsor

Another thing we have started doing this year is to help a few organizations and projects that we think are particularly valuable by providing them with fiscal sponsorship as part of our 501(c)3 status, and access to some of our internal infrastructure for accounting and financial automations.

We largely did this because it seemed like an easy win, and it's not a part we are looking to scale up, but I figured I would still mention it. We are currently providing fiscal sponsorship for:

Adam Scholl and Aysja Johnson's research

John Wentworth and David Lorell's research

Charitable research by Elizabeth Van Nostrand

Rationality Meetup organizing by Skyler Crossman

LessWrong Deutschland for meetup organizing

Fiscal sponsors usually take between 6% and 12% of revenue as a sponsorship fee. We mostly don't charge fees[22] and are probably a better fiscal sponsor than alternatives. We have received around $1M in donations for the above projects in 2025, so if you think the above projects are cost-effective, a reasonable estimate is that we generated something like $60k to $120k in value via doing so.

If it's worth doing, it's worth doing with made up statistics

Thus is it written: “It’s easy to lie with statistics, but it’s easier to lie without them.”

Last year, I did a number of rough back of the envelope estimates to estimate how much impact we produced with our work. Their basic logic still seems valid to me and you can read them in the collapsible section below, but I will also add some new ones for work we did in 2025.

Cost-Effectiveness Estimates for 2025 work

It's tricky to evaluate our work on communicating to the public in terms of cost-effectiveness. It's easy to measure salience and reach, but it's much harder to measure whether the ideas we propagated made things better or worse.

So I will focus here on the former, making what seem to me relatively conservative assumptions about the latter, but assuming that the ideas we propagated ultimately will improve the world instead of making it worse, which I definitely do not think is obvious!

For LessWrong in particular, I think last year's estimates are still pretty applicable. Given the increase in traffic and activity, you can basically multiply the numbers in those estimates by roughly 1.5. Since nothing else really changed, I won't repeat the analysis here.

AI 2027

AI 2027 focused on things that matter, and there are few things that I would consider more valuable for someone to read than AI 2027. It also made an enormous splash in the world.

Indeed, the traffic for AI 2027 in 2025 has been similar to the traffic for all of LessWrong in 2025, with about 5M unique users and 10M page-views, whereas LessWrong had 4.2M unique users and 22M page-views.

Comparing to paid advertisements

A reference class that seems worth comparing against is how much it would have cost to drive this much traffic to AI 2027 using advertisement providers like Google Ads. Ad spend is an interesting comparison because driving attention to something via paid advertisements is in some sense the obvious way of converting money into attention, and it is how much of global attention gets allocated.

Cost per click tends to be around $2 per click for the demographics that AI 2027 is aimed at, so driving 10M pageviews to AI 2027 using classical online advertising would have cost you on the order of $20M, which of course would pretty easily fully fund both Lightcone's and the AI Futures team's budgets.

Another related reference class is how much money AI 2027 would have generated if we simply had put a Google AdSense ad on it. Historically much of journalism and the attention economy has been driven by advertisement revenue.

Google AdSense ads tend to generate around $2 per 1,000 views, so you would end up with around $20,000. If you fully count secondary media impressions as well (i.e. count views for AI 2027-based YouTube videos and news articles), you would end up at more like $200,000. This is not nothing, but it's a far cry from what the project actually cost (roughly $750k across Lightcone and the AI Futures team).
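As a rough sanity check on the two advertising comparisons above, the arithmetic can be sketched in a few lines of Python. The function and variable names are made up for illustration, and the $2 per click and $2 per 1,000 views rates are the ballpark figures quoted above, not measured values.

```python
def cost_to_buy_traffic(pageviews: int, cost_per_click_usd: float) -> float:
    """Cost of buying every pageview as one paid click."""
    return pageviews * cost_per_click_usd


def display_ad_revenue(pageviews: int, revenue_per_1000_views_usd: float) -> float:
    """Revenue from showing one display ad per pageview."""
    return pageviews / 1000 * revenue_per_1000_views_usd


# Figures taken from the text above (all approximate).
AI_2027_PAGEVIEWS = 10_000_000
COST_PER_CLICK_USD = 2.0
REVENUE_PER_1000_VIEWS_USD = 2.0

buy_cost = cost_to_buy_traffic(AI_2027_PAGEVIEWS, COST_PER_CLICK_USD)
ads_income = display_ad_revenue(AI_2027_PAGEVIEWS, REVENUE_PER_1000_VIEWS_USD)

print(f"Cost to buy the traffic: ${buy_cost:,.0f}")
print(f"Revenue from running ads: ${ads_income:,.0f}")
```

The factor of 1,000 between the two numbers comes entirely from the rates: buying a click costs about as much as a publisher earns from a thousand views.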

Comparison to think tank budgets

Consider a hypothetical think tank with a budget on the order of $100M. If the only thing of significance that they ended up doing in the last few years of operations was release AI 2027, I think funding them would have been a cost-effective donation opportunity.

For example, CSET was funded for around $100M by Open Philanthropy/Good Ventures. I would trade all public outputs from CSET in exchange for the reach and splash that AI 2027 made.

And I do think some of CSET's work has been quite impactful and valuable! Their work on the semiconductor supply chain did raise a lot of important considerations to policymakers. Of course, it's harder to quantify the other benefits of a program like CSET, which e.g. included allowing Jason Matheny to become head of RAND, which has an annual budget of around $500M (though of course only a very small fraction of that budget goes to projects I would consider even remote candidates for being highly cost-effective). But I still find it a valuable comparison.

Comparison to 80,000 Hours

80,000 Hours has an annual budget of around $15M. The primary output of 80k is online content about effective career choice and cause prioritization. They use engagement-hours as the primary metric in their own cost-effectiveness estimates. Their latest impact report cites around 578,733 engagement hours.

Meanwhile, AI-2027.com has had 745,423 engagement hours in 2025 so far, and by the end of the year will have likely produced 50% more engagement hours than all of 80k's content in 2024.

80k's latest report goes through June 2025. A large portion of 80k's engagement hours in this report were from their AI 2027 video.

This suggests that 80k themselves considers engagement with the AI 2027 content to be competitive with engagement with their other material. If you were to straightforwardly extrapolate 80k's impact from their engagement hours, this would suggest a substantial fraction of 80k's total impact for 2025, as measured by their own metrics, will be downstream of AI 2027.

This naively suggests that if you think 80k was a cost-effective donation target, then it would make sense to fund the development and writing of AI 2027 at least to the tune of $15M (though again, this kind of estimate is at most a rough sanity-check on cost-effectiveness estimates and shouldn't be taken at face value). By my previous estimate of Lightcone being responsible for roughly 30% of the impact of the project, this would justify our full budget 2-3 times over.
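The back-of-the-envelope reasoning in this comparison can be written out as a short Python sketch. The engagement hours, 80k's budget, and the 30% attribution are the numbers quoted above. The $2M Lightcone budget is an assumption taken from the fundraising target, not an audited figure, and all variable names are illustrative.

```python
# Numbers quoted in the text above.
HOURS_80K_LATEST_REPORT = 578_733
HOURS_AI_2027_SO_FAR = 745_423
BUDGET_80K_USD = 15_000_000
LIGHTCONE_SHARE_OF_AI_2027 = 0.30

# Assumption: Lightcone's annual budget is roughly the ~$2M fundraising target.
LIGHTCONE_BUDGET_USD = 2_000_000

# How AI 2027's engagement so far compares with 80k's latest reported total.
hours_ratio = HOURS_AI_2027_SO_FAR / HOURS_80K_LATEST_REPORT

# Naive valuation: treat AI 2027 as worth 80k's budget, then take Lightcone's share.
lightcone_value_usd = LIGHTCONE_SHARE_OF_AI_2027 * BUDGET_80K_USD
budget_multiple = lightcone_value_usd / LIGHTCONE_BUDGET_USD

print(f"AI 2027 hours / 80k hours: {hours_ratio:.2f}")
print(f"Value attributed to Lightcone: ${lightcone_value_usd:,.0f}")
print(f"Multiple of assumed budget: {budget_multiple:.2f}x")
```

Under these assumptions the multiple lands between 2 and 3. It scales linearly with both the attribution share and the assumed budget, so it is only as good as those two guesses.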

Lighthaven in 2025

A conservative estimate of the value we provide is to assume that Lightcone is splitting the surplus generated by Lighthaven 50/50 with our clients, as that split was roughly what we were aiming for when we set our event pricing baselines in 2024 (see last year's estimates for more detail on that):

We project ending 2025 with a total of ~$3.1M in event revenue. My algorithm for splitting the marginal benefit 50/50 with our clients suggests that as long as Lighthaven costs less than ~$4.12M (which it did), it should be worth funding if you thought it was worth funding the organizations that have hosted events and programs here.

I estimate that Lighthaven will generate $100k–$200k more revenue in 2026 compared to in 2025.[25]

What other people say about funding us

Independent evaluation efforts are rare in the AI safety space. People defer too much to fads, or popularity, or large institutions on which projects are worth funding. But ultimately, it is really hard to fully independently evaluate a project like Lightcone yourself, so here is a collection of things I could find that people have said about our cost-effectiveness.

Zvi Mowshowitz

Lightcone Infrastructure is my current top pick across all categories. If you asked me where to give a dollar, or quite a few dollars, to someone who is not me, I would tell you to fund Lightcone Infrastructure.

Focus: Rationality community infrastructure, LessWrong, the Alignment Forum and Lighthaven.

Leaders: Oliver Habryka and Rafe Kennedy

Funding Needed: High

Confidence Level: High

Disclaimer: I am on the CFAR board which used to be the umbrella organization for Lightcone and still has some lingering ties. My writing appears on LessWrong. I have long time relationships with everyone involved. I have been to several reliably great workshops or conferences at their campus at Lighthaven. So I am conflicted here.

With that said, Lightcone is my clear number one. I think they are doing great work, both in terms of LessWrong and also Lighthaven. There is the potential, with greater funding, to enrich both of these tasks, and also for expansion.

There is a large force multiplier here (although that is true of a number of other organizations I list as well).

They made their 2024 fundraising pitch here, I encourage reading it.

Where I am beyond confident is that if LessWrong, the Alignment Forum or the venue Lighthaven were unable to continue, any one of these would be a major, quite bad unforced error.

LessWrong and the Alignment Forum are a central part of the infrastructure of the meaningful internet.

Lighthaven is miles and miles away the best event venue I have ever seen. I do not know how to convey how much the design contributes to having a valuable conference, designed to facilitate the best kinds of conversations via a wide array of nooks and pathways designed with the principles of Christopher Alexander. This contributes to and takes advantage of the consistently fantastic set of people I encounter there.

The marginal costs here are large (~$3 million per year, some of which is made up by venue revenue), but the impact here is many times that, and I believe they can take on more than ten times that amount and generate excellent returns.

If we can go beyond short term funding needs, they can pay off the mortgage to secure a buffer, and buy up surrounding buildings to secure against neighbors (who can, given this is Berkeley, cause a lot of trouble) and to secure more housing and other space. This would secure the future of the space.

I would love to see them then expand into additional spaces. They note this would also require the right people.

Donate through every.org, or contact team@lesswrong.com.

Emmett Shear

70% epistemic confidence: People will talk about Lighthaven in Berkeley in the future the same way they talk about IAS at Princeton or Bell Labs.

[...]

I'm donating $50k to support Lightcone Infrastructure. Has to be in the running as the most effective pro-humanity pro-future infrastructure in the Bay Area.

Eli Rose from Open Philanthropy/Coefficient Giving

I work as a grantmaker on the Global Catastrophic Risks Capacity-Building team at Open Philanthropy; a large part of our funding portfolio is aimed at increasing the human capital and knowledge base directed at AI safety. I previously worked on several of Open Phil’s grants to Lightcone.

As part of my team’s work, we spend a good deal of effort forming views about which interventions have or have not been important historically for the goals described in my first paragraph. I think LessWrong and the Alignment Forum have been strongly positive for these goals historically, and think they’ll likely continue to be at least into the medium term.

Mikhail Samin (recommending against giving to Lightcone Infrastructure)

In short:

The resources are and can be used in ways the community wouldn’t endorse; I know people who regret their donations, given the newer knowledge of the policies of the organization.

The org is run by Oliver Habryka, who puts personal conflicts above shared goals, and is fine with being the kind of agent others regret having dealt with.

(In my opinion, the LessWrong community has somewhat better norms, design taste, and standards than Lightcone Infrastructure.)

The cost of running/supporting LessWrong is much lower than Lightcone Infrastructure’s spending.

Michael Dickens

Lightcone runs LessWrong and an office that Lightcone calls "Bell Labs for longtermism".

Lightcone has a detailed case for impact on Manifund. In short, Lightcone maintains LessWrong, and LessWrong is upstream of a large quantity of AI safety work.

I believe Lightcone has high expected value and it can make good use of marginal donations.

By maintaining LessWrong, Lightcone somewhat improves many AI safety efforts (plus efforts on other beneficial projects that don't relate to AI safety). If I were very uncertain about what sort of work was best, I might donate to Lightcone as a way to provide diffuse benefits across many areas. But since I believe (a specific sort of) policy work has much higher EV than AI safety research, I believe it makes more sense to fund that policy work directly.

An illustration with some made-up numbers: Suppose that

There are 10 categories of AI safety work.

Lightcone makes each of them 20% better.

The average AI safety work produces 1 utility point.

Well-directed AI policy produces 5 utility points.

Then a donation to Lightcone is worth 2 utility points, and my favorite AI policy orgs are worth 5 points. So a donation to Lightcone is better than the average AI safety org, but not as good as good policy orgs.
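The made-up numbers in this illustration can be reproduced with a short Python sketch. The variable names are illustrative, and the model (summing a 20% improvement over 10 categories, each worth 1 utility point) is one reading of the quoted example rather than a formula the quote states.

```python
# Made-up numbers from the quoted illustration.
NUM_CATEGORIES = 10
IMPROVEMENT_PER_CATEGORY = 0.20
UTILITY_PER_AVERAGE_SAFETY_WORK = 1.0
UTILITY_WELL_DIRECTED_POLICY = 5.0

# A diffuse benefit: a small improvement summed across every category.
lightcone_utility = (
    NUM_CATEGORIES * IMPROVEMENT_PER_CATEGORY * UTILITY_PER_AVERAGE_SAFETY_WORK
)

print(f"Lightcone: {lightcone_utility:.1f} utility points")
print(f"Average AI safety org: {UTILITY_PER_AVERAGE_SAFETY_WORK:.1f}")
print(f"Well-directed policy org: {UTILITY_WELL_DIRECTED_POLICY:.1f}")
```

The ranking depends on the inputs: with these numbers, the diffuse option would overtake the policy option only if the per-category improvement exceeded 50%.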

Daniel Kokotajlo

My wife and I just donated $10k, and will probably donate substantially more once we have more funds available.

LW 1.0 was how I heard about and became interested in AGI, x-risk, effective altruism, and a bunch of other important ideas. LW 2.0 was the place where I learned to think seriously about those topics & got feedback from others on my thoughts. (I tried to discuss all this stuff with grad students and professors at UNC, where I was studying philosophy, with only limited success). Importantly, LW 2.0 was a place where I could write up my ideas in blog post or comment form, and then get fast feedback on them (by contrast with academic philosophy where I did manage to write on these topics but it took 10x longer per paper to write and then years to get published and then additional years to get replies from people I didn't already know). More generally the rationalist community that Lightcone has kept alive, and then built, is... well, it's hard to quantify how much I'd pay now to retroactively cause all that stuff to happen, but it's way more than $10k, even if we just focus on the small slice of it that benefitted me personally.

Looking forward, I expect a diminished role, due simply to AGI being a more popular topic these days so there are lots of other places to talk and think about it. In other words the effects of LW 2.0 and Lightcone more generally are now (large) drops in a bucket whereas before they were large drops in an eye-dropper. However, I still think Lightcone is one of the best bang-for-buck places to donate to from an altruistic perspective. The OP lists several examples of important people reading and being influenced by LW; I personally know of several more.

...All of the above was just about magnitude of impact, rather than direction. (Though positive direction was implied). So now I turn to the question of whether Lightcone is consistently a force for good in the world vs. e.g. a force for evil or a high-variance force for chaos.

Because of cluelessness, it's hard to say how things will shake out in the long run. For example, I wouldn't be surprised if the #1 determinant of how things go for humanity is whether the powerful people (POTUS & advisors & maybe congress and judiciary) take AGI misalignment and x-risk seriously when AGI is imminent. And I wouldn't be surprised if the #1 determinant of that is the messenger -- which voices are most prominently associated with these ideas? Esteemed professors like Hinton and Bengio, or nerdy weirdos like many of us here? On this model, perhaps all the good Lightcone has done is outweighed by this unfortunate set of facts, and it would have been better if this website never existed.

[...]

On the virtue side, in my experience Lightcone seems to have high standards for epistemic rationality and for integrity & honesty. Perhaps the highest, in fact, in this space. Overall I'm impressed with them and expect them to be consistently and transparently a force for good. Insofar as bad things result from their actions I expect it to be because of second-order effects like the status/association thing I mentioned above, rather than because of bad behavior on their part.

So yeah. It's not the only thing we'll be donating to, but it's in our top tier.

Jacob Pfau

I am slightly worried about the rate at which LW is shipping new features. I'm not convinced they are net positive. I see lesswrong as a clear success, but unclear user of the marginal dollar; I see lighthaven as a moderate success and very likely positive to expand at the margin.

The interface has been getting busier[1] whereas I think the modal reader would benefit from having as few distractions as possible while reading. I don't think an LLM-enhanced editor would be useful, nor am I excited about additional tutoring functionality.

I am glad to see that people are donating, but I would have preferred this post to carefully signpost the difference between status-quo value of LW (immense) from the marginal value of paying for more features for LW (possibly negative), and from your other enterprises. Probably not worth the trouble, but is it possible to unbundle these for the purposes of donations?

Separately, thank you to the team! My research experience over the past years has benefitted from LW on a daily basis.

EDIT: thanks to Habryka for more details. After comparing to previous site versions I'm more optimistic about the prospects for active work on LW.

Ryan Greenblatt

I donated $3,000[26]. I've gained and will continue to gain a huge amount of value from LW and other activities of Lightcone Infrastructure, so it seemed like a cooperative and virtuous move to donate.

I tried to donate at a level such that if all people using LW followed a similar policy to me, Lightcone would likely be reasonably funded, at least for the LW component.

I think marginal funding to Lightcone Infrastructure beyond the ~$3 million needed to avoid substantial downsizing is probably worse than some other funding opportunities. So, while I typically donate larger amounts to a smaller number of things, I'm not sure if I will donate a large amount to Lightcone yet. You should interpret my $3,000 donation as indicating "this is a pretty good donation opportunity and I think there are general cooperativeness reasons to donate" rather than something stronger.

CowardlyPersonUsingPseudonym

Have you considered cutting salaries in half? According to the table you share in the comments, you spend 1.4 million on the salary for the 6 of you, which is $230k per person. If the org was in a better shape, I would consider this a reasonable salary, but I feel that if I was in the situation you guys are in, I would request my salary to be at least halved.

Relatedly, I don't know if it's possible for you to run with fewer employees than you currently have. I can imagine that 6 people is the minimum that is necessary to run this org, but I had the impression that at least one of you is working on creating new rationality and cognitive trainings, which might be nice in the long-term (though I'm pretty skeptical of the project altogether), but I would guess you don't have the slack for this kind of thing now if you are struggling for survival.

On the other side of the coin, can you extract more money out of your customers? The negotiation strategy you describe in the post (50-50ing the surplus) is very nice and gentlemanly, and makes sense if you are both making profit. But if there is a real chance of Lightcone going bankrupt and needing to sell Lighthaven, then your regular customers would need to fall back to their second best option, losing all their surplus. So I think in this situation it would be reasonable to try to charge your regular customers practically the maximum they are willing to pay.

Sam Marks

I've donated $5,000. As with Ryan Greenblatt's donation, this is largely coming from a place of cooperativeness: I've gotten quite a lot of value from Lesswrong and Lighthaven.

IMO the strongest argument, which I'm still weighing, that I should donate more for altruistic reasons comes from the fact that quite a number of influential people seem to read content hosted on Lesswrong, and this might lead to them making better decisions. A related anecdote: When David Bau (big name professor in AI interpretability) gives young students a first intro to interpretability, his go-to example is (sometimes at least) nostalgebraist's logit lens. Watching David pull up a Lesswrong post on his computer and excitedly talk students through it was definitely surreal and entertaining.

When I was trying to assess the value I've gotten from Lightcone, I was having a hard time at first converting non-monetary value into dollars. But then I realized that there was an easy way to eyeball a monetary lower-bound for value provided me by Lightcone: estimate the effect that Lesswrong had on my personal income. Given that Lesswrong probably gets a good chunk of the credit for my career transition from academic math into AI safety research, that's a lot of dollars!

Jacob Czynski

I see much more value in Lighthaven than in the rest of the activity of Lightcone.

I wish Lightcone would split into two (or even three) organizations, as I would unequivocally endorse donating to Lighthaven and recommend it to others, vs. LessWrong where I'm not at all confident it's net positive over blogs and Substacks, and the grantmaking infrastructure and other meta which is highly uncertain and probably highly replaceable.

All of the analysis of the impact of new LessWrong is misleading at best; it is assuming that volume on LessWrong is good in itself, which I do not believe to be the case; if similar volume is being stolen from other places, e.g. dropping away from blogs on the SSC blogroll and failing to create their own Substacks, which I think is very likely to be true, this is of minimal benefit to the community and likely negative benefit to the world, as LW is less visible and influential than strong existing blogs or well-written new Substacks.

That's on top of my long-standing objections to the structure of LW, which is bad for community epistemics by encouraging groupthink, in a way that standard blogs are not. If you agree with my contention there, then even a large net increase in volume would still be, in expectation, significantly negative for the community and the world. Weighted voting delenda est; post-author moderation delenda est; in order to win the war of good group epistemics we must accept losing the battles of discouraging some marginal posts from the prospect of harsh, rude, and/or ignorant feedback.

Benjamin Todd

Some more concrete ideas that stand out to me as worth thinking about are as follows (in no particular order):

[...]

Lightcone. LessWrong seems to have been cost-effective at movement building, and the Lightcone conference space also seems useful, though it’s more sensitive to your assessment of the value of Bay Area rationality community building.

[...]

I’m not making a blanket recommendation to fund these organisations, but they seem worthy of consideration, and also hopefully illustrate a rough lower bound for what you could do with $10m of marginal funds. With some work, you can probably find stuff that’s even better.

If you know of any other recent public evaluations of our cost-effectiveness, post them in the comments! I tried to be thorough in listing everything I could find that takes any kind of stance on the value of donating to Lightcone.

The Lightcone Principles

A key consideration in deciding whether Lightcone is worth supporting is what kind of principles determine Lightcone's priorities and operational decisions.

Conveniently, as part of Inkhaven, I have just published a sequence of internal memos that try to illustrate our core operational principles. The sequence is not finished yet, but the essays released so far are:

"Two can keep a secret if one is dead. So please share everything with at least one person"

"Tell people as early as possible it's not going to work out"

"Put numbers on stuff, all the time, otherwise scope insensitivity will eat you"

For another (somewhat more outdated) look into our operational philosophy, you can read this collection of short stories that Aaron Silverbook wrote about us: "The Adventures of the Lightcone Team".

Most importantly, we value flexibility and the ability to pivot extremely highly. This has historically meant that we grow very slowly and put a huge premium on wide generalist skillsets in the people we hire.

This paid off a lot this year, where we (probably) ended up having a lot of our impact by working on things like AI 2027, the MIRI book, and Deciding To Win. It's the philosophy that allowed us to build Lighthaven even though we had largely been a team of web-developers before, and build out much of the S-Process and the Survival and Flourishing Fund the years before that.

Plans for 2026

What we will do next year will depend a lot on how much funding we can raise.

The very minimum with which we can survive at all is roughly $1.4M. If we raise that much but not more, we will figure out how to take out a mortgage on the one property we own next to Lighthaven. This will increase our annual burn rate by around $70k/year in interest payments, will cost us a few staff weeks to get arranged, and will add a bunch of constraints to how we can use that property which will probably reduce Lighthaven income by another $30k–$50k a year in expectation.

If we fundraise less than $1.4M (or at least fail to get reasonably high confidence commitments that we will get more money before we run out of funds), I expect we will shut down. We will start the process of selling Lighthaven. I will make sure that LessWrong.com content somehow ends up in a fine place, but I don't expect I would be up for running it on a much smaller budget in the long run.

$2M is roughly the "we will continue business as usual and will probably do similarly cool things to what we've done in the past" threshold. In addition to continuing all of our existing development of LessWrong, Lighthaven, and collaborations with the AI Futures team and MIRI, this amount will likely include at least one additional major project and 1–2 additional hires to handle that increased workload.

I do have a lot of things I would like to invest in. While I don't expect to make a lot of core hires to our organization, I would like to trial a bunch of people. I see a lot of ways we could substantially increase our impact by spending more money and paying various contractors to expand our range of motion, and of course given that at this present moment we have less than a month of runway in the bank, it would really be very valuable for us to have more runway so we don't immediately drop dead in exactly 12 months.

Here are some projects that are at the top of my list of things we are likely to work on with more funds:

LessWrong LLM tools

We did an exploratory ~2 month sprint in late 2024 on figuring out how to use language models as first class tools on LessWrong (and for intellectual progress more broadly). While we found a few cool applications (I continue to use the "base model" autocomplete tool that we built for admins in the LessWrong editor regularly whenever I write a long post in order to get me unstuck on what to say next), it seemed to me that making something actually great with the models at the time would take a huge amount of work that was likely going to be obsolete within a year.

But it's been more than a year, and the models are really goddamn smart now, and I can think of very few people who are better placed to invest in LLM-thinking tools than we are. The LessWrong community is uniquely open to getting value out of modern AI systems, we have a lot of early-adopter types who are willing to learn new tools, and we have almost a decade of experience building tools for collective thought here on LessWrong.

Figure out how to deploy money at scale to make things better

I currently think it's around 35% likely that Anthropic will IPO in 2026. Even if it doesn't, I expect Anthropic will have some kind of major buy-back event in 2026.

If an IPO happens at Anthropic's ~$300B valuation, together with Anthropic's charitable 3:1 matching program for donations from early employees, this seems like it would result in by far the biggest philanthropic scale-up in history. It would not surprise me if Anthropic employees will collectively be trying to distribute over $5B/yr by end of 2026, and over $20B/yr by end of 2027. This alone would end up being on the order of ~5% of current total philanthropic spending in the US[27].

OpenAI employees will likely be less aggressive in their philanthropic spending, but my guess is we will also see an enormous scale up of capital deployed by people who have at least some substantial interest in reducing existential risk and shaping the long-run future of humanity.

Lightcone has been working on grant-making infrastructure for 7+ years, which puts us in a good position to build something here. On the other hand, we have a relatively adversarial stance toward the labs, which makes us not a great fit to distribute all of this capability labs money. Nevertheless, I am not seeing anyone else picking up the ball here who I expect to do a good job.

As part of our early efforts in the space, the ARM Fund (airiskfund.com) has recently become a Lightcone sponsored project, and we will be investing staff time to scale it up and make it better. In particular, I am excited about creating infrastructure for many funders to coordinate their giving, negotiate fair funding shares for different projects, and create consistent deal-flow where promising projects can be put in front of the eyes of potential funders, and any advisors they want to defer to. The S-Process infrastructure we built between 2019 and 2024 has taught us a lot of valuable lessons about what works and doesn't work in the space.

More and more ambitious public communication about AI Existential risk

We will be working with the AI Futures team on the sequel to AI 2027, but there are many more opportunities for high quality public communications about ASI strategy and risk.

For example, it's pretty plausible we will want to expand directly into video production. IMO the 80k YouTube team has been a big success, and there is a lot more demand for producing high quality video content on AI existential risk and related topics.

And even within the domain of internet essay production, I think there are many more high-quality beige websites that should exist on a wide variety of topics, and I think the returns in terms of shifting public opinion and informing key decision-makers continue to be very high.

What do you get for donating to Lightcone Infrastructure?

The most important thing you get for donating to Lightcone is the fruits of our labor. A world (hopefully) slightly less likely to go off the rails. More smart people thinking openly and honestly about difficult topics. A civilization more on the ball about what matters in the world.

But, that's not the only thing! We try pretty hard to honor and credit the people whose financial contributions have made it possible for us to exist.

We do this in a few different ways:

If you donate more than $1,000 to us, we will send you a limited edition Lightcone donor t-shirt (if you fill out the form we send you). You can also claim an entry on the LessWrong donor leaderboard.

If you donate more than $2,000, you can get a personalized plaque on our campus.

If you donate more than $20,000, you can get a structure on campus named after you, like one of our meeting rooms, a gazebo, or some other kind of room.

For even larger donations, you could have a whole lecture hall or area of campus named after you (like the Drethelin Gardens, dedicated to Drethelin as a result of a $150k donation to us).

And if you want any other kind of recognition, please reach out! It's pretty likely we can make something work.

Logistics of donating to Lightcone

We are a registered 501(c)3 nonprofit. If you are in the United States, the best way to donate cash, stock, or crypto is through every.org:

https://www.every.org/lightcone-infrastructure/f/2026-fundraiser

There are some OK options for getting tax-deductibility in the UK. I am still working on some ways for people in other European countries to get tax-deductibility.[28]

If you are in the UK and want to donate a large amount, you can donate through NPT Transatlantic (they charge a flat fee of £2,000 on any donation less than £102,000). If you want to donate less, the Anglo-American Charity is a better option: their fee is 4% on donations below £15K, with a minimum fee of £250.
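To make the comparison concrete, here is a rough sketch of the two fee schedules as quoted above. It only covers the ranges stated in this post (below £102,000 for NPT Transatlantic, below £15K for the Anglo-American Charity); the function and constant names are made up for illustration, and fees outside those ranges are returned as unknown rather than guessed. Please check current fees with each intermediary before donating.

```python
from typing import Optional

# Figures as quoted in this post; names are hypothetical, for illustration only.
NPT_FLAT_FEE_GBP = 2_000        # flat fee on donations below the ceiling
NPT_CEILING_GBP = 102_000
AAC_RATE_PERCENT = 4            # percentage fee on donations below the ceiling
AAC_CEILING_GBP = 15_000
AAC_MIN_FEE_GBP = 250


def npt_fee(donation_gbp: float) -> Optional[float]:
    """NPT Transatlantic fee, or None where this post states no fee."""
    if donation_gbp < NPT_CEILING_GBP:
        return float(NPT_FLAT_FEE_GBP)
    return None  # fee at or above £102,000 is not stated here


def anglo_american_fee(donation_gbp: float) -> Optional[float]:
    """Anglo-American Charity fee, or None where this post states no fee."""
    if donation_gbp < AAC_CEILING_GBP:
        percentage_fee = donation_gbp * AAC_RATE_PERCENT / 100
        return max(percentage_fee, float(AAC_MIN_FEE_GBP))
    return None  # fee at or above £15K is not stated here


if __name__ == "__main__":
    for amount in (5_000, 10_000, 50_000):
        print(amount, anglo_american_fee(amount), npt_fee(amount))
```

Under these assumptions the £250 minimum is what you pay on anything below £6,250 (since 4% of £6,250 is £250), and for any donation under £15K the Anglo-American Charity fee stays below £600, well under NPT's flat £2,000.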

If there is enough interest, I can probably also set up equivalence determinations in most other countries that have a similar concept of tax-deductibility.

If you have any weirder assets that you can't donate through every.org, we can figure out some way of giving you a good donation receipt for that. Just reach out (via email, DM, or text/signal at +1 510 944 3235) and I will get back to you ASAP with the logistics. Last round we received a bunch of private company equity that I expect we will sell this year and benefit quite a bit from, while providing some healthy tax-deductibility for the donor.

Also, please check if your employer has a donation matching program! Many big companies double the donations made by their employees to nonprofits (for example, if you work at Google and donate to us, Google will match your donation up to $10k). Here is a quick list of organizations with matching programs I found, but I am sure there are many more. If you donate through one of these, please also send me an email so I can properly thank you and trace the source of the donation.

Every.org takes 1% of crypto donations through the platform, so if you want to donate a lot of crypto, or don't want your identity revealed to every.org, reach out to us and we can arrange a transfer.

Thank you

And that's it. If you are reading this, you have read (or at least scrolled past) more than 13,000 words full of detail about what we've done in the last year, what our deal is, and what will happen if you give us money.

I do really think that we are very likely the best option you have for turning money into better futures for humanity, and hopefully this post will have provided some evidence to help you evaluate that claim yourself.

I think we can pull the funds together to make sure that Lightcone Infrastructure gets to continue to exist. If you can and want to be a part of that, donate to us here. We need to raise ~$1.4M to survive the next 12 months, $2M to continue our operations as we have, and can productively use a lot of funding beyond that.

We built the website and branding, the AI Futures Team wrote the content and provided the data

The Survival and Flourishing Fund continues to recommend grants to us in a way that indicates their process rates us as highly cost-effective, and staff responsible for evaluating us at Coefficient Giving (previously Open Philanthropy) think it’s likely we continue to be highly cost-effective (but are unable to recommend grants to us due to political/reputational constraints of their funders). The EA Infrastructure Fund also recommended a grant to us, though it is unlikely to do so going forward, as its leadership is changing and the fund is being integrated into the Centre for Effective Altruism.

Here are some individuals who have personally given to us or strongly recommended donations to us who others might consider well-informed about funding opportunities in this space: Zvi Mowshowitz, Scott Alexander, Ryan Greenblatt, Buck Shlegeris, Nate Soares, Eliezer Yudkowsky, Vitalik Buterin, Jed McCaleb, Emmett Shear, Richard Ngo, Daniel Kokotajlo, Steven Byrnes and William Saunders.

Part of that is around $300k of property tax, for which we are currently applying for an exemption. Property tax exemption applications seem to take around 2-3 years to get processed (and we filed ours a bit more than a year ago). If we are granted an exemption, any property tax we paid while the application was pending will be refunded, so if that happens the project will have basically broken fully even this year!

See last year's fundraiser post for more detail on our finances here.

The fundraising progress thermometer will include these matching funds. I also go into why it's 12.5%, which is a weirdly specific number, in the finances section later in the post.

To be clear, I do not expect this to continue to be the case for the coming decade. As the financial stakes grow larger, I expect discussion of these topics to start being dominated by ideas and memes that are more memetically viable. More optimized for fitness in the marketplace of ideas.

It gives a model of the AI Alignment problem that I think is hilariously over-optimistic. In the "Slowdown" scenario, humanity appears to suddenly end up with aligned Superintelligence in what reads to me as an extremely unrealistic way, and some of the forecasting work behind it is IMO based on confused semi-formal models that have become too complicated for even the authors to comprehend.

And to be clear, Lightcone did receive some payment for our work on AI 2027 from the AI Futures Team (around $100k). The rate we offered was not a sustainable rate for us and the time we spent cost us more in salaries than that. This low rate was the result of the AI Futures team at the time also being quite low on funding. The AI Futures team also has some of their own credit allocation estimates which put Lightcone closer to 15% instead of 30%.

And here Lightcone did receive a pretty substantial amount of money for our work on the project (around $200k).

Also legally we have some constraints on what we can work on as a 501(c)3, which generally cannot engage in lobbying for specific candidates. We were in the clear for this project since we didn't work on any of the content of the website, and were paid market rate for our work (which is higher than our rates for AI 2027 or If Anyone Builds It), but we would have entered dicey territory if we had been involved with the content.

Which, to be clear, I can't really comprehensively argue for in this post.

Unfortunately due to the rise of AI agents, Google Analytics has become a much worse judge of traffic statistics than it has been in previous years, so I think these specific numbers here are likely overestimating the increase by approximately a factor of 2.

As a quick rundown: Shane Legg is a DeepMind cofounder and early LessWrong poster directly crediting Eliezer for working on AGI. Demis has also frequently referenced LW ideas and presented at both FHI and the Singularity Summit. OpenAI's founding team and early employees were heavily influenced by LW ideas (and Ilya was at my CFAR workshop in 2015). Elon Musk has clearly read a bunch of LessWrong, and was strongly influenced by Superintelligence, which itself was heavily influenced by LW. A substantial fraction of Anthropic's leadership team actively read and/or write on LessWrong.

For a year or two I maintained a simulated investment portfolio at investopedia.com/simulator/ with the primary investment thesis "whenever a LessWrong comment with investment advice gets over 40 karma, act on it". I made 80% returns over the first year (half of which was buying early shorts in the company "Nikola" after a user posted a critique of them on the site).

After loading up half of my portfolio on some option calls with expiration dates a few months into the future, I then forgot about it, only to come back to see all my options contracts expired and value-less, despite the sell-price at the expiration date being up 60%, wiping out most of my portfolio. This has taught me both that LW is amazing alpha for financial investment, and that I am not competent enough to invest on it (luckily other people have done reasonable things based on things said on LW and do now have a lot of money, so that's nice, and maybe they could even donate some back to us!)

Off-the-cuff top LW worldview predictions: AI big, prediction markets big, online communities big, academia much less important, crypto big, AI alignment is a real problem, politics is indeed the mindkiller, god is indeed not real, intelligence enhancement really important, COVID big

Off-the-cuff worst LW worldview predictions: Politics unimportant/can be ignored, neural nets not promising, we should solve alignment instead of trying to avoid building ASI for a long while, predicted COVID would spread much more uncontrollably than it did

In general, I am pretty sure LessWrong can handle many more good-faith submissions in terms of content right now, and I trust the karma system to select the ones worth reading. Hacker News handles almost two orders of magnitude more submissions than we get in a day, and ends up handling it fine using the same fundamental algorithm.

The cost of Inkhaven is probably dominated by the opportunity cost of lost clients during the month of November. We managed to run one major unrelated conference on one weekend, but could've probably found at least a few other smaller events to make money from. Inkhaven itself brought $2k-$4k per resident, though around half of them required financial assistance; it also brought in ~$75k in sponsorships, and of course we had to pay a lot for staff fees. On net I think Inkhaven cost us around $50k primarily due to the opportunity cost of not renting out Lighthaven to someone else.

We deferred one of our annual $1M interest payments on Lighthaven from 2023 to 2024, since we were dealing with the big FTX-caused disruption to the whole funding ecosystem. This meant we were trying to fundraise for $3M last year to make both of our interest payments, but we only raised just above $2M by the end of our fundraiser.

Our backup plan was to take out a loan secured against a property we own right next to Lighthaven, which is worth around $1M. This would have been quite costly to us because commercial lenders really don't like loaning to nonprofits and the rates we were being offered were pretty bad. I am glad we didn't have to do it!

Our salary policy is roughly that people at Lightcone are paid approximately 70% of what I am quite confident they could make if they worked in industry instead of at Lightcone, though we have not increased salaries for quite a while and we have been drifting downwards from that. See here for last year's discussion of our salary policies.

And we did receive a grant from the EA Infrastructure Fund, which I am really grateful for. I do think that, given the upcoming change in leadership of the fund, this is unlikely to repeat (as I expect the new leadership to be much more averse to reputational associations with us).

We have a 3% fee in one or two of our fiscal sponsorship agreements, but haven't actually collected it.

This table is not exhaustive, and OpenPhil told us they chose organizations for inclusion partly based on which ones it happened to be easy to get budget data on. Also, we've removed one organization at their request (which also ranked worse than LessWrong 2.0).

The linked grant is ~$6M over a bit more than 2 years, and there are a bunch of other grants that also seem to go to university groups, making my best guess around $5M/yr, but I might be off here.

Up until recently I was expecting a larger revenue increase in 2026 compared to 2025, but I now think this is less likely. The MATS program, which made up around $1.2M of our revenue while booking us for a total of 5 months for 2025, is moving to their own space for their 2025 summer program. This means we are dealing with a particularly large disruption to our venue availability.

While MATS makes up a lot of our annual revenue, the program's revenue per day tends to be lower than almost every other program we host. I expect that, in the long run, MATS leaving will increase the total revenue of the venue, but we likely won't be able to fully execute on that shift within 2026.

Ryan later donated an extra $30k on top of the $3,000 described in this comment.

I expect this willingness to spend to probably translate into only about 50% of this money actually going out, mostly because spinning up programs that will be able to distribute this much money will take a while, and even remotely-reasonable giving opportunities will dry up.